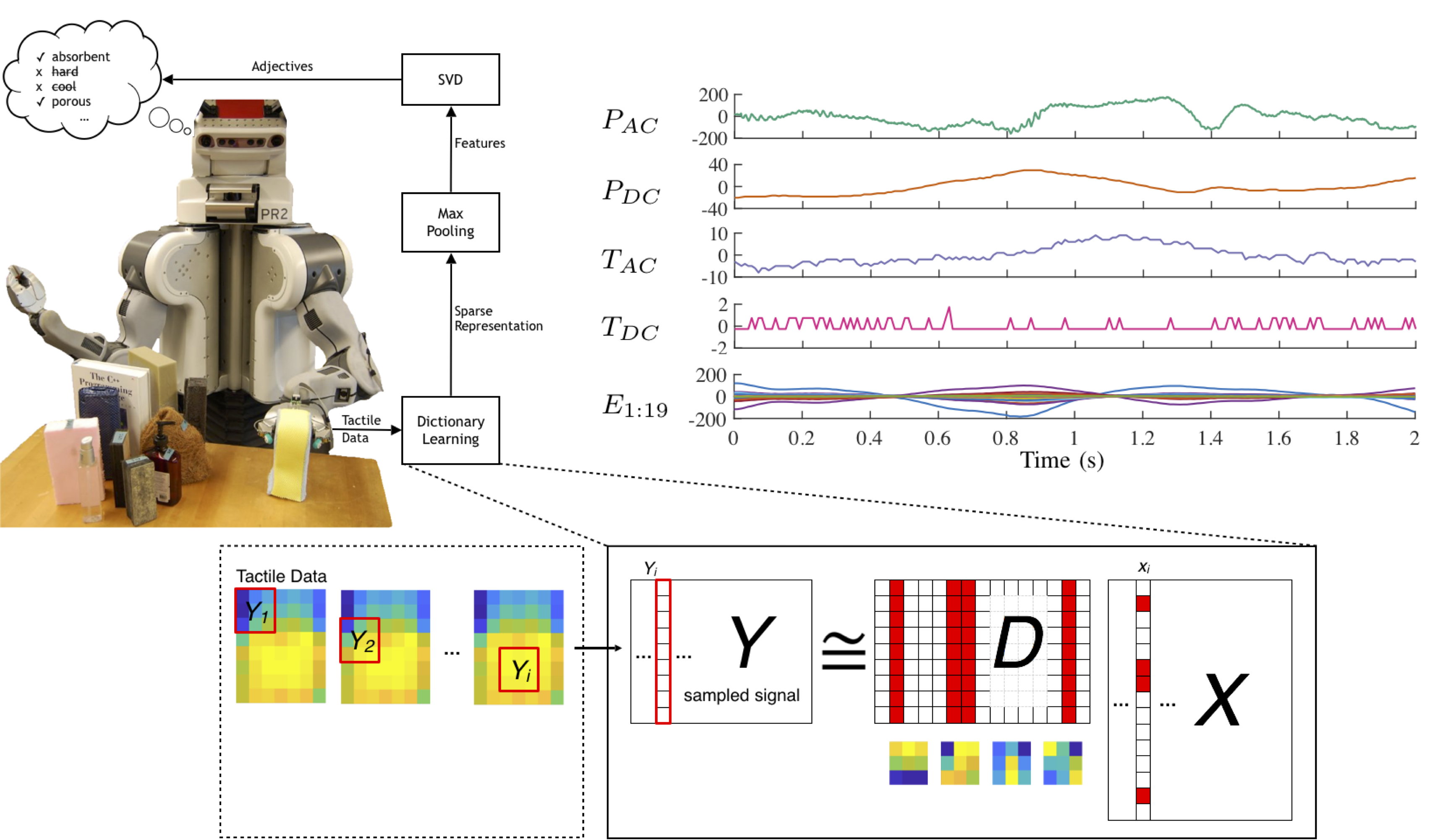

As the robot explores objects, raw tactile data is gathered and partitioned into an observation matrix Y. Unsupervised dictionary learning extracts a dictionary of basis vectors D from the data. Y is then reconstructed as a sparse combination of the elements of D, with the weights given by the sparse representation matrix X. The elements of X are max-pooled, and the resulting feature vectors are used for adjective classification.
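The pipeline in the figure can be illustrated with a minimal NumPy sketch. It is not the implementation used in this work: the optimizer (ISTA sparse coding alternated with a least-squares dictionary update), the function names, the parameter values, and the synthetic data are all assumptions chosen for illustration. It also assumes that the columns of Y are windows ordered by recording, so that pooling over equal-sized column groups yields one feature vector per recording.

```python
import numpy as np

def sparse_code(Y, D, lam=0.1, n_iter=100):
    """ISTA: approximately minimize 0.5*||Y - D X||_F^2 + lam*||X||_1 over X."""
    # Lipschitz constant of the gradient (guarded against a degenerate D).
    L = max(np.linalg.norm(D, 2) ** 2, 1e-12)
    X = np.zeros((D.shape[1], Y.shape[1]))
    for _ in range(n_iter):
        G = X - (D.T @ (D @ X - Y)) / L                          # gradient step
        X = np.sign(G) * np.maximum(np.abs(G) - lam / L, 0.0)    # soft threshold
    return X

def learn_dictionary(Y, n_atoms=8, lam=0.1, n_outer=20, seed=0):
    """Alternate sparse coding with a least-squares dictionary update."""
    rng = np.random.default_rng(seed)
    D = rng.standard_normal((Y.shape[0], n_atoms))
    D /= np.linalg.norm(D, axis=0, keepdims=True)
    for _ in range(n_outer):
        X = sparse_code(Y, D, lam)
        # Least-squares update with a small ridge term for numerical stability.
        D = Y @ X.T @ np.linalg.inv(X @ X.T + 1e-6 * np.eye(n_atoms))
        # Rescale atoms to unit norm (unused atoms stay at zero).
        D /= np.maximum(np.linalg.norm(D, axis=0, keepdims=True), 1e-12)
    return D

def max_pool(X, n_recordings):
    """Max-pool absolute coefficients over the windows of each recording."""
    groups = np.array_split(np.abs(X), n_recordings, axis=1)
    return np.stack([g.max(axis=1) for g in groups])

# Synthetic stand-in for tactile data: 6 recordings x 10 windows, 16 samples each.
rng = np.random.default_rng(0)
Y = rng.standard_normal((16, 60))
D = learn_dictionary(Y, n_atoms=8)
X = sparse_code(Y, D)
features = max_pool(X, n_recordings=6)
print(features.shape)  # (6, 8): one 8-dimensional feature vector per recording
```

Each row of `features` would then be passed to a classifier, for example one binary classifier per adjective.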

Humans can form an impression of how a new object feels simply by touching its surfaces with the densely innervated skin of the fingertips. Recent research has focused on endowing robots with similar levels of haptic intelligence, but these efforts are usually limited to specific applications and make strong assumptions about the underlying structure of the haptic signals. By contrast, unsupervised machine learning can discover important underlying structure without biases for specific applications; such representations can then be effectively applied to a variety of learning tasks.

Our work applies unsupervised feature-learning methods to multimodal haptic data (fingertip deformation, pressure, vibration, and temperature) that was previously collected using a Willow Garage PR2 robot equipped with a pair of SynTouch BioTac sensors. The robot used four pre-programmed exploratory procedures (EPs) to touch sixty unique objects, each of which was separately labeled by blindfolded humans using haptic adjectives from a finite set. The raw haptic data and human labels are contained in the Penn Haptic Adjective Corpus (PHAC-2) dataset.

We are particularly interested in how representations derived from this dataset depend on EPs and sensory modalities, and how their classification accuracy varies across the set of adjectives. Do representations learned from certain EPs and data streams classify texture-related adjectives better than others? Can variability in performance be predicted by properties of the learned representations? Is it necessary to learn a different representation for each EP, or does enough shared information exist to use a combined representation?

Our work shows that learned features are far superior to hand-crafted features for adjective classification. Additionally, the features learned from certain EPs and sensory modalities provide a better basis for adjective classification than those from others. We are also investigating dependencies between EPs and sensory modalities, relationships between learned representations, and ordinal (rather than binary) adjectives.