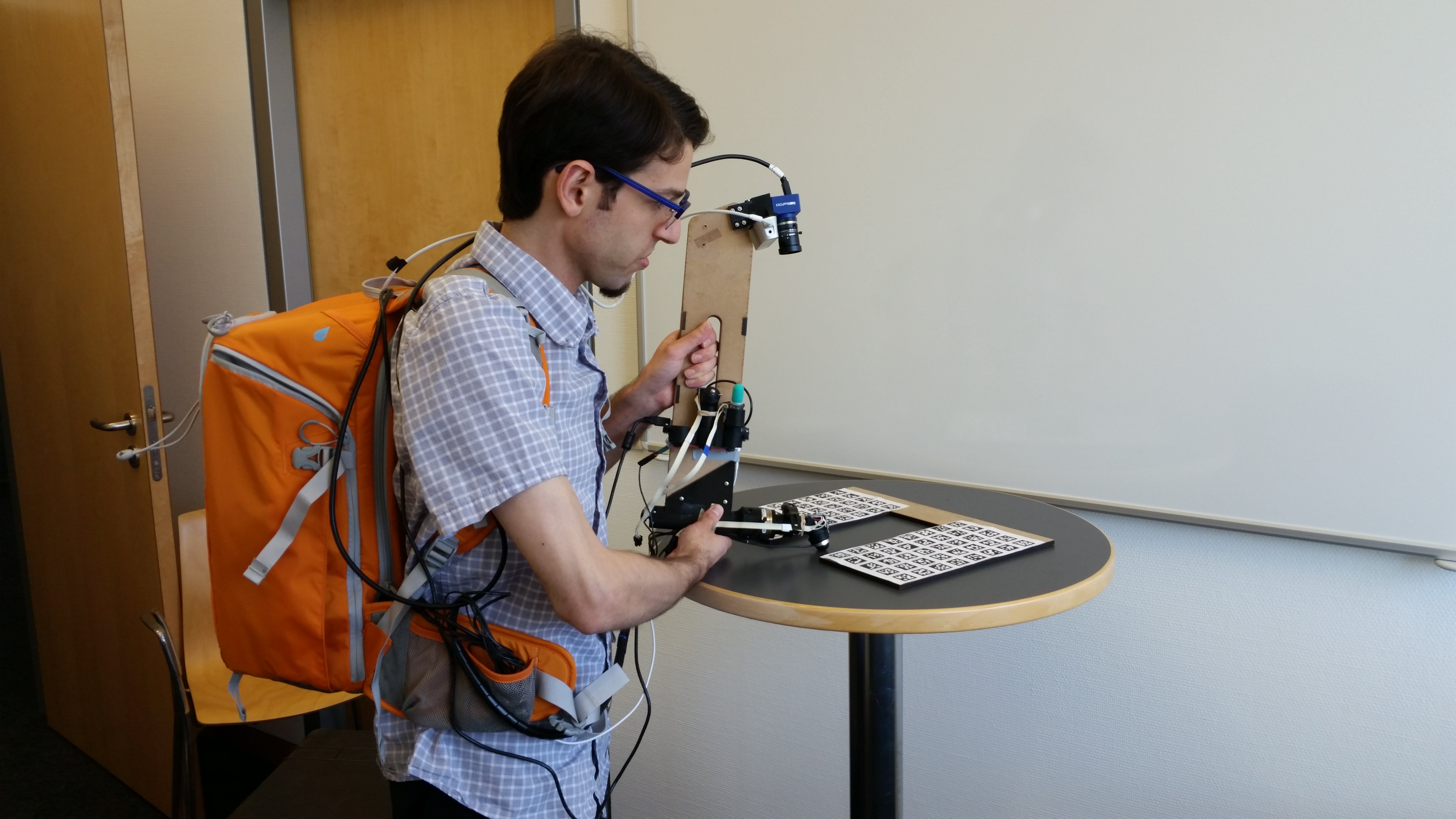

An experimenter operates the Proton Pack, a portable visuo-haptic surface interaction recording device.

Humans draw on their vast life experience of touching things to make inferences about the objects in their environment; this capability lets them make haptic judgments before actually touching anything. For example, it is effortless to select the correct grip force for picking up a delicate object, or to choose a gait for walking safely across a slippery patch of ice. Robots struggle to "feel with their eyes" in the same way, and we believe that this problem can be solved through machine learning on a large dataset.

Modern robots are almost always equipped with advanced vision hardware that typically provides 3D data (using a stereo camera or a Kinect-like device). However, multimodal perception is still in development, especially in the area of haptics. Few robots have haptic sensors, and those that do use hardware that is not standardized and produces data we do not always know how to interpret.

In this project, we designed and built the Proton Pack, a portable, self-contained, human-operated visuo-haptic sensing device. It integrates visual sensors with an interchangeable haptic end-effector. The major parts of the project are as follows:

- Calibration: to relate all the sensor readings to one another, we performed several calibration procedures that determine the relative camera positions and the mechanical properties of each end-effector.

- Surface classification: to make sure that the data collected with the Proton Pack is relevant to haptic perception, and to validate hardware improvements along the way, we ran proof-of-concept classification tests on small sets of surfaces.

- Data collection: we used the Proton Pack to collect a large dataset of end-effector/surface interactions. Both the Proton Pack and several sets of material samples traveled across the Atlantic for this project.

- Machine learning: using the dataset we collected, we attempted to train a computer to "feel with its eyes", that is, to predict haptic properties from visual images.
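To make the calibration step concrete, here is a minimal sketch of how calibrated relative poses can be chained so that a point measured in one sensor frame is expressed in another. It is an illustration under stated assumptions, not the project's calibration code: the frame names (`camera A`, `camera B`, `tip`), the helper functions, and all numeric values are hypothetical.

```python
import math

def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    return [
        [*rotation[0], translation[0]],
        [*rotation[1], translation[1]],
        [*rotation[2], translation[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]

def rot_z(angle_rad):
    """3x3 rotation about the z axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def compose(a, b):
    """Matrix product a @ b of two 4x4 transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform_point(t, point):
    """Apply a 4x4 transform to a 3D point."""
    p = [*point, 1.0]
    return [sum(t[i][k] * p[k] for k in range(4)) for i in range(3)]

IDENTITY_ROTATION = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Hypothetical calibration results (made-up values, in meters):
# pose of camera B in camera A's frame, and pose of the
# end-effector tip in camera B's frame.
T_a_b = make_transform(rot_z(math.pi / 2), [0.10, 0.0, 0.0])
T_b_tip = make_transform(IDENTITY_ROTATION, [0.0, 0.05, 0.30])

# Chaining the two gives the tip pose in camera A's frame.
T_a_tip = compose(T_a_b, T_b_tip)
tip_in_a = transform_point(T_a_tip, [0.0, 0.0, 0.0])
```

With transforms like these, a contact location sensed at the end-effector can be expressed in either camera's frame, which is the sense in which calibration relates the sensor readings to one another.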

This material is based upon work supported by the U.S. National Science Foundation (NSF) under grant 1426787 as part of the National Robotics Initiative. Alex Burka was also supported by the NSF under the Complex Scene Perception IGERT program, award number 0966142.