2024

Gong, Y.

Engineering and Evaluating Naturalistic Vibrotactile Feedback for Telerobotic Assembly

University of Stuttgart, Stuttgart, Germany, August 2024, Faculty of Design, Production Engineering and Automotive Engineering (phdthesis)

L’Orsa, R., Bisht, A., Yu, L., Murari, K., Westwick, D. T., Sutherland, G. R., Kuchenbecker, K. J.

Reflectance Outperforms Force and Position in Model-Free Needle Puncture Detection

In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, USA, July 2024 (inproceedings) Accepted

Mohan, M., Mat Husin, H., Kuchenbecker, K. J.

Expert Perception of Teleoperated Social Exercise Robots

In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction (HRI), pages: 769-773, Boulder, USA, March 2024, Late-Breaking Report (LBR) (5 pages) presented at the IEEE/ACM International Conference on Human-Robot Interaction (HRI) (inproceedings)

Burns, R.

Creating a Haptic Empathetic Robot Animal That Feels Touch and Emotion

University of Tübingen, Tübingen, Germany, February 2024, Department of Computer Science (phdthesis)

2023

Mohan, M.

Gesture-Based Nonverbal Interaction for Exercise Robots

University of Tübingen, Tübingen, Germany, October 2023, Department of Computer Science (phdthesis)

Allemang-Trivalle, A.

Enhancing Surgical Team Collaboration and Situation Awareness through Multimodal Sensing

In Proceedings of the ACM International Conference on Multimodal Interaction, pages: 716-720, Extended abstract (5 pages) presented at the ACM International Conference on Multimodal Interaction (ICMI) Doctoral Consortium, Paris, France, October 2023 (inproceedings)

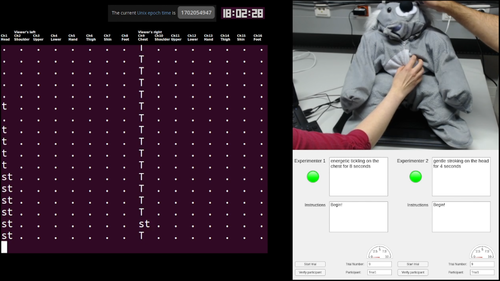

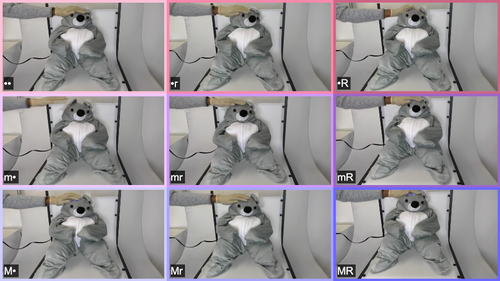

Burns, R. B., Ojo, F., Kuchenbecker, K. J.

Wear Your Heart on Your Sleeve: Users Prefer Robots with Emotional Reactions to Touch and Ambient Moods

In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), pages: 1914-1921, Busan, South Korea, August 2023 (inproceedings)

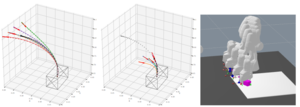

Oh, Y., Passy, J., Mainprice, J.

Augmenting Human Policies using Riemannian Metrics for Human-Robot Shared Control

In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), pages: 1612-1618, Busan, South Korea, August 2023 (inproceedings)

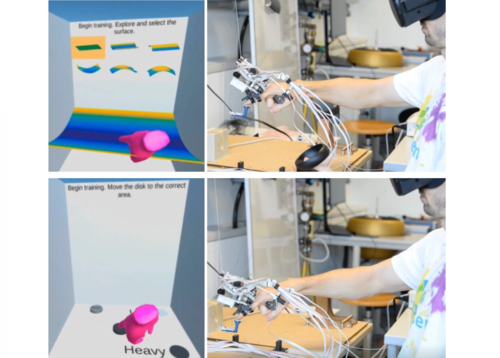

Gong, Y., Javot, B., Lauer, A. P. R., Sawodny, O., Kuchenbecker, K. J.

Naturalistic Vibrotactile Feedback Could Facilitate Telerobotic Assembly on Construction Sites

In Proceedings of the IEEE World Haptics Conference (WHC), pages: 169-175, Delft, The Netherlands, July 2023 (inproceedings)

Schulz, A., Anderson, C. D., Cooper, C., Roberts, D., Loyo, J., Lewis, K., Kumar, S., Rolf, J., Marulanda, N. A. G.

A Toolkit for Expanding Sustainability Engineering Utilizing Foundations of the Engineering for One Planet Initiative

In Proceedings of the American Society for Engineering Education (ASEE), Baltimore, USA, June 2023, Andrew Schulz, Cindy Cooper, and Cindy Anderson contributed equally. (inproceedings)

Schulz, A., Stathatos, S., Shriver, C., Moore, R.

Utilizing Online and Open-Source Machine Learning Toolkits to Leverage the Future of Sustainable Engineering

In Proceedings of the American Society for Engineering Education (ASEE), Baltimore, USA, June 2023, Andrew Schulz and Suzanne Stathatos are co-first authors. (inproceedings)

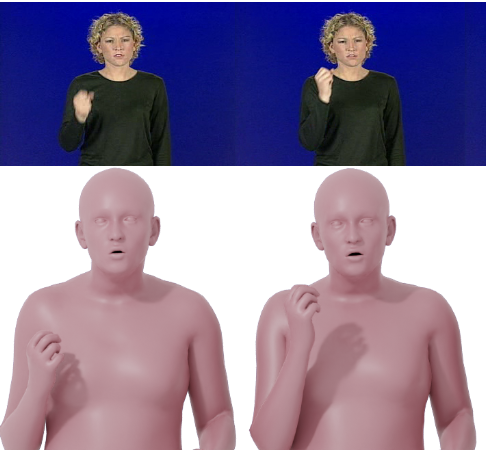

Forte, M., Kulits, P., Huang, C. P., Choutas, V., Tzionas, D., Kuchenbecker, K. J., Black, M. J.

Reconstructing Signing Avatars from Video Using Linguistic Priors

In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages: 12791-12801, CVPR 2023, June 2023 (inproceedings)

2022

Richardson, B.

Multi-Timescale Representation Learning of Human and Robot Haptic Interactions

University of Stuttgart, Stuttgart, Germany, December 2022, Faculty of Computer Science, Electrical Engineering and Information Technology (phdthesis)

Nam, S.

Understanding the Influence of Moisture on Fingerpad-Surface Interactions

University of Tübingen, Tübingen, Germany, October 2022, Department of Computer Science (phdthesis)

L’Orsa, R., Lama, S., Westwick, D., Sutherland, G., Kuchenbecker, K. J.

Towards Semi-Automated Pleural Cavity Access for Pneumothorax in Austere Environments

In Proceedings of the International Astronautical Congress (IAC), pages: 1-7, Paris, France, September 2022 (inproceedings)

Allemang-Trivalle, A., Eden, J., Ivanova, E., Huang, Y., Burdet, E.

How Long Does It Take to Learn Trimanual Coordination?

In Proceedings of the IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), pages: 211-216, Napoli, Italy, August 2022 (inproceedings)

Allemang-Trivalle, A., Eden, J., Huang, Y., Ivanova, E., Burdet, E.

Comparison of Human Trimanual Performance Between Independent and Dependent Multiple-Limb Training Modes

In Proceedings of the IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), Seoul, Korea, August 2022 (inproceedings)

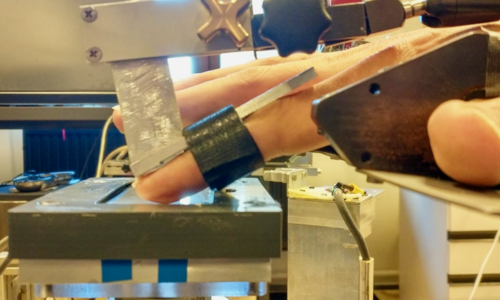

Machaca, S., Cao, E., Chi, A., Adrales, G., Kuchenbecker, K. J., Brown, J. D.

Wrist-Squeezing Force Feedback Improves Accuracy and Speed in Robotic Surgery Training

In Proceedings of the IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), pages: 700-707, Seoul, Korea, 9th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob 2022), August 2022 (inproceedings)

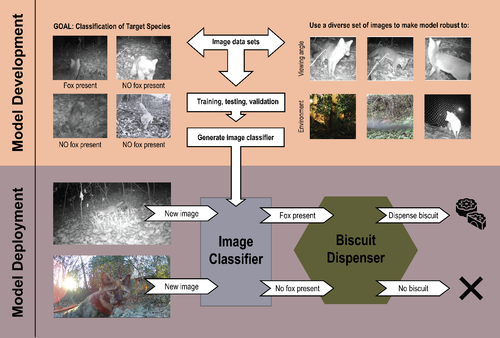

Schulz, A., Shriver, C., Seleb, B., Greiner, C., Hu, D., Moore, R., Zhang, M., Jadali, N., Patka, A.

A Foundational Design Experience in Conservation Technology: A Multi-Disciplinary Approach to Meeting Sustainable Development Goals

Proceedings of the American Society for Engineering Education, pages: 1-12, Minneapolis, USA, June 2022, Award for best paper (conference)

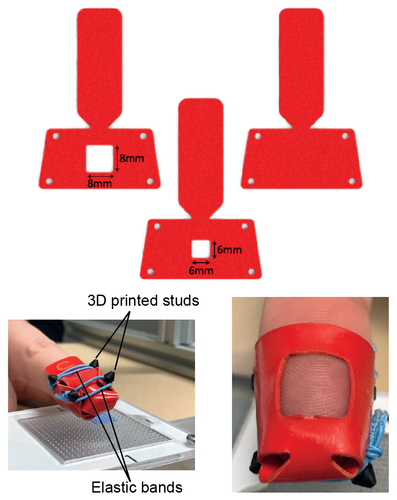

Gueorguiev, D., Javot, B., Spiers, A., Kuchenbecker, K. J.

Larger Skin-Surface Contact Through a Fingertip Wearable Improves Roughness Perception

In Haptics: Science, Technology, Applications, pages: 171-179, Lecture Notes in Computer Science, 13235, (Editors: Seifi, Hasti and Kappers, Astrid M. L. and Schneider, Oliver and Drewing, Knut and Pacchierotti, Claudio and Abbasimoshaei, Alireza and Huisman, Gijs and Kern, Thorsten A.), Springer, Cham, 13th International Conference on Human Haptic Sensing and Touch Enabled Computer Applications (EuroHaptics 2022), May 2022 (inproceedings)

Schulz, A., Seleb, B., Shriver, C., Hu, D., Moore, R.

Toward the UN’s Sustainable Development Goals (SDGs): Conservation Technology for Design Teaching & Learning

pages: 1-9, Charlotte, USA, March 2022 (conference)

Ortenzi, V., Filipovica, M., Abdlkarim, D., Pardi, T., Takahashi, C., Wing, A. M., Di Luca, M., Kuchenbecker, K. J.

Robot, Pass Me the Tool: Handle Visibility Facilitates Task-Oriented Handovers

In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction (HRI), pages: 256-264, March 2022, Valerio Ortenzi and Maija Filipovica contributed equally to this publication. (inproceedings)

2021

Thomas, N., Fazlollahi, F., Brown, J. D., Kuchenbecker, K. J.

Sensorimotor-Inspired Tactile Feedback and Control Improve Consistency of Prosthesis Manipulation in the Absence of Direct Vision

In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages: 6174-6181, Prague, Czech Republic, September 2021 (inproceedings)

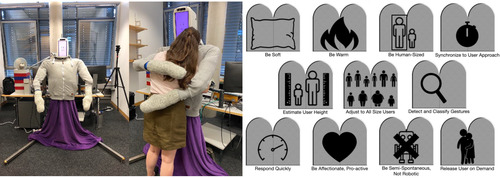

Block, A. E.

HuggieBot: An Interactive Hugging Robot With Visual and Haptic Perception

ETH Zürich, Zürich, August 2021, Department of Computer Science (phdthesis)

Pardi, T., Ghalamzan E., A., Ortenzi, V., Stolkin, R.

Optimal Grasp Selection, and Control for Stabilising a Grasped Object, with Respect to Slippage and External Forces

In Proceedings of the IEEE-RAS International Conference on Humanoid Robots (Humanoids 2020), pages: 429-436, Munich, Germany, July 2021 (inproceedings)

Abdlkarim, D., Ortenzi, V., Pardi, T., Filipovica, M., Wing, A. M., Kuchenbecker, K. J., Di Luca, M.

PrendoSim: Proxy-Hand-Based Robot Grasp Generator

In ICINCO 2021: Proceedings of the International Conference on Informatics in Control, Automation and Robotics, pages: 60-68, (Editors: Gusikhin, Oleg and Nijmeijer, Henk and Madani, Kurosh), SciTePress, Setúbal, 18th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2021), July 2021 (inproceedings)

Young, E. M., Kuchenbecker, K. J.

Ungrounded Vari-Dimensional Tactile Fingertip Feedback for Virtual Object Interaction

In CHI ’21: Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, pages: 217, ACM, New York, NY, Conference on Human Factors in Computing Systems (CHI 2021), May 2021 (inproceedings)

Mohan, M., Nunez, C. M., Kuchenbecker, K. J.

Robot Interaction Studio: A Platform for Unsupervised HRI

In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, May 2021 (inproceedings)

Krauthausen, F.

Robotic Surgery Training in AR: Multimodal Record and Replay

pages: 1-147, University of Stuttgart, Stuttgart, May 2021, Study Program in Software Engineering (mastersthesis)

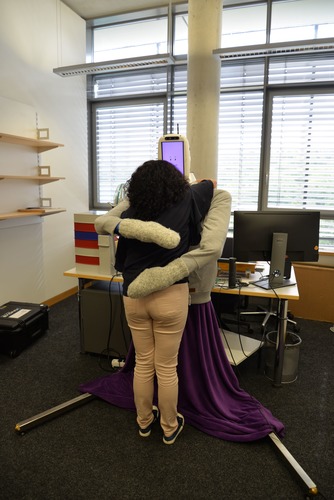

Block, A. E., Christen, S., Gassert, R., Hilliges, O., Kuchenbecker, K. J.

The Six Hug Commandments: Design and Evaluation of a Human-Sized Hugging Robot with Visual and Haptic Perception

In HRI ’21: Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, pages: 380-388, ACM, New York, NY, USA, ACM/IEEE International Conference on Human-Robot Interaction (HRI 2021), March 2021 (inproceedings)

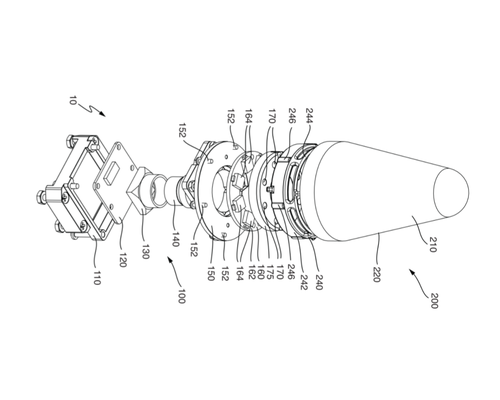

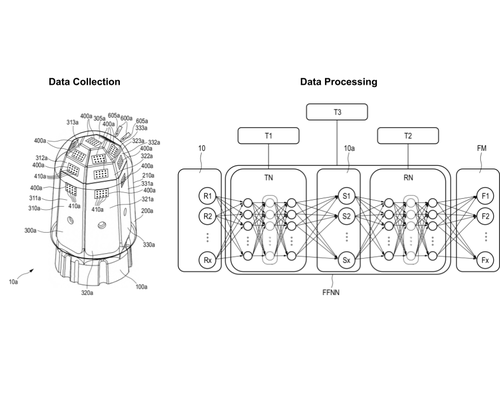

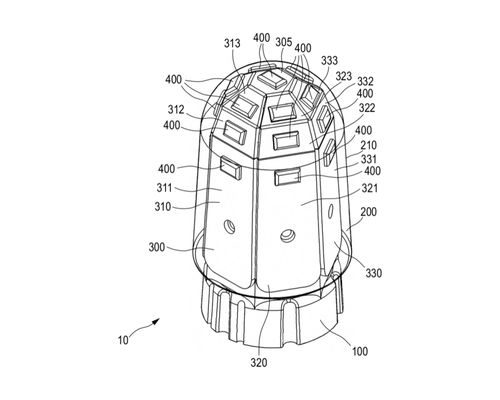

Sun, H., Martius, G., Kuchenbecker, K. J.

Sensor Arrangement for Sensing Forces and Methods for Fabricating a Sensor Arrangement and Parts Thereof

(PCT/EP2021/050230), Max Planck Institute for Intelligent Systems, Max Planck Ring 4, January 2021 (patent)

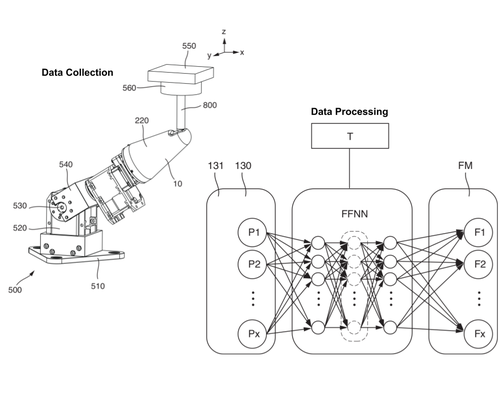

Sun, H., Martius, G., Kuchenbecker, K. J.

Method for Force Inference, Method for Training a Feed-Forward Neural Network, Force Inference Module, and Sensor Arrangement

(PCT/EP2021/050231), Max Planck Institute for Intelligent Systems, Max Planck Ring 4, January 2021 (patent)

2020

Young, E. M.

Delivering Expressive and Personalized Fingertip Tactile Cues

University of Pennsylvania, Philadelphia, PA, December 2020, Department of Mechanical Engineering and Applied Mechanics (phdthesis)

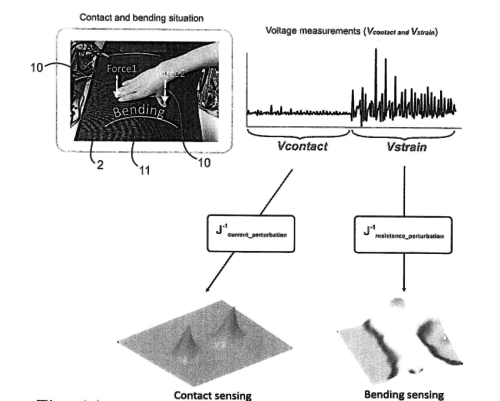

Lee, H., Kuchenbecker, K. J.

System and Method for Simultaneously Sensing Contact Force and Lateral Strain

(EP20000480.2), December 2020 (patent)

Fitter, N. T., Kuchenbecker, K. J.

Synchronicity Trumps Mischief in Rhythmic Human-Robot Social-Physical Interaction

In Robotics Research, 10, pages: 269-284, Springer Proceedings in Advanced Robotics, (Editors: Amato, Nancy M. and Hager, Greg and Thomas, Shawna and Torres-Torriti, Miguel), Springer, Cham, 18th International Symposium on Robotics Research (ISRR), 2020 (inproceedings)

Sun, H., Martius, G., Lee, H., Spiers, A., Fiene, J.

Method for Force Inference of a Sensor Arrangement, Methods for Training Networks, Force Inference Module and Sensor Arrangement

(PCT/EP2020/083261), Max Planck Institute for Intelligent Systems, Max Planck Ring 4, November 2020 (patent)

Spiers, A., Sun, H., Lee, H., Martius, G., Fiene, J., Seo, W. H.

Sensor Arrangement for Sensing Forces and Method for Fabricating a Sensor Arrangement

(PCT/EP2020/083260), November 2020 (patent)

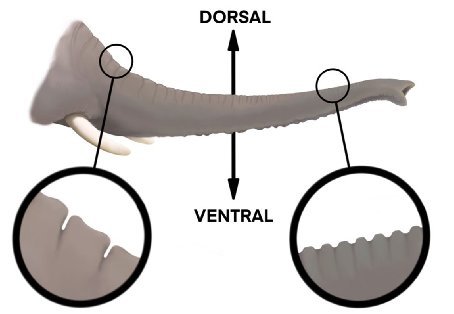

Schulz, A., Fourney, E., Sordilla, S., Sukhwani, A., Hu, D.

Elephant Trunk Skin: Nature’s Flexible Kevlar

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, October 2020 (conference)

Hu, S.

Modulating Physical Interactions in Human-Assistive Technologies

University of Pennsylvania, Philadelphia, PA, August 2020, Department of Mechanical Engineering and Applied Mechanics (phdthesis)

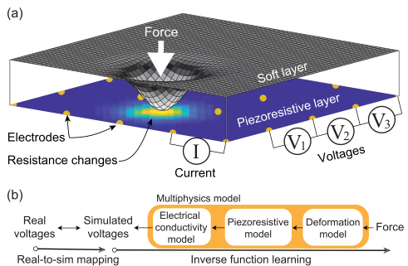

Lee, H., Park, H., Serhat, G., Sun, H., Kuchenbecker, K. J.

Calibrating a Soft ERT-Based Tactile Sensor with a Multiphysics Model and Sim-to-real Transfer Learning

In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), pages: 1632-1638, IEEE International Conference on Robotics and Automation (ICRA 2020), May 2020 (inproceedings)

Park, K., Park, H., Lee, H., Park, S., Kim, J.

An ERT-Based Robotic Skin with Sparsely Distributed Electrodes: Structure, Fabrication, and DNN-Based Signal Processing

In 2020 IEEE International Conference on Robotics and Automation (ICRA 2020), pages: 1617-1624, IEEE, Piscataway, NJ, IEEE International Conference on Robotics and Automation (ICRA 2020), May 2020 (inproceedings)

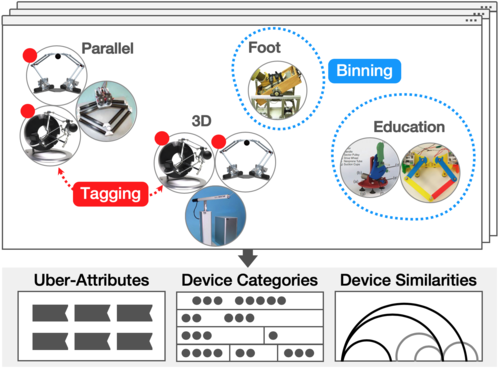

Seifi, H., Oppermann, M., Bullard, J., MacLean, K. E., Kuchenbecker, K. J.

Capturing Experts’ Mental Models to Organize a Collection of Haptic Devices: Affordances Outweigh Attributes

In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pages: 268, Conference on Human Factors in Computing Systems (CHI 2020), April 2020 (inproceedings)

Gueorguiev, D., Lambert, J., Thonnard, J., Kuchenbecker, K. J.

Changes in Normal Force During Passive Dynamic Touch: Contact Mechanics and Perception

In Proceedings of the IEEE Haptics Symposium (HAPTICS), pages: 746-752, IEEE Haptics Symposium (HAPTICS 2020), March 2020 (inproceedings)

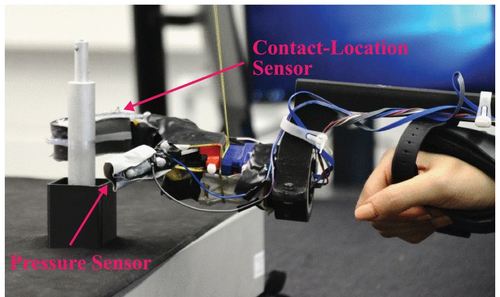

Mohtasham, D., Narayanan, G., Calli, B., Spiers, A. J.

Haptic Object Parameter Estimation during Within-Hand-Manipulation with a Simple Robot Gripper

In Proceedings of the IEEE Haptics Symposium (HAPTICS), pages: 140-147, March 2020 (inproceedings)

2019

Park, H., Lee, H., Park, K., Mo, S., Kim, J.

Deep Neural Network Approach in Electrical Impedance Tomography-Based Real-Time Soft Tactile Sensor

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages: 7447-7452, IEEE, 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), November 2019 (conference)

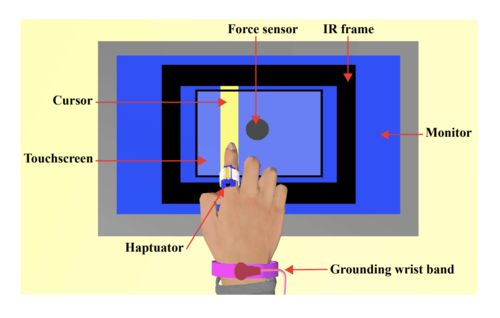

Jamalzadeh, M., Güçlü, B., Vardar, Y., Basdogan, C.

Effect of Remote Masking on Detection of Electrovibration

In Proceedings of the IEEE World Haptics Conference (WHC), pages: 229-234, Tokyo, Japan, July 2019 (inproceedings)

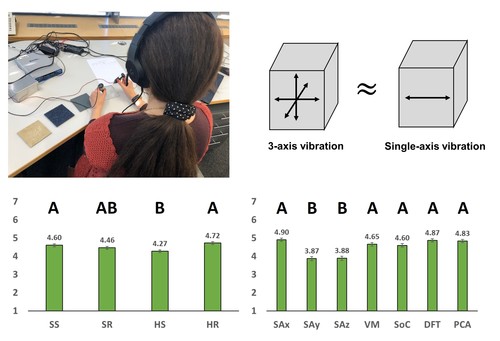

Park, G., Kuchenbecker, K. J.

Objective and Subjective Assessment of Algorithms for Reducing Three-Axis Vibrations to One-Axis Vibrations

In Proceedings of the IEEE World Haptics Conference (WHC), pages: 467-472, July 2019 (inproceedings)

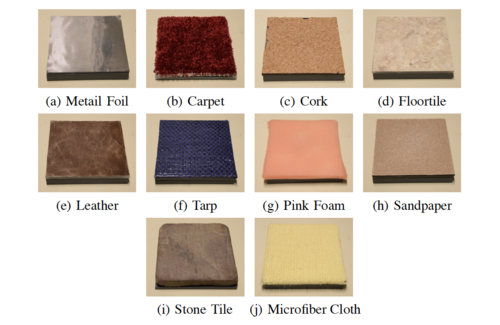

Vardar, Y., Wallraven, C., Kuchenbecker, K. J.

Fingertip Interaction Metrics Correlate with Visual and Haptic Perception of Real Surfaces

In Proceedings of the IEEE World Haptics Conference (WHC), pages: 395-400, Tokyo, Japan, July 2019 (inproceedings)

Gloumakov, Y., Spiers, A. J., Dollar, A. M.

A Clustering Approach to Categorizing 7 Degree-of-Freedom Arm Motions during Activities of Daily Living

In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), pages: 7214-7220, Montreal, Canada, May 2019 (inproceedings)