2024

Cao, C. G. L., Javot, B., Bhattarai, S., Bierig, K., Oreshnikov, I., Volchkov, V. V.

Fiber-Optic Shape Sensing Using Neural Networks Operating on Multispecklegrams

IEEE Sensors Journal, 24(17):27532-27540, September 2024 (article)

Sanchez-Tamayo, N., Yoder, Z., Rothemund, P., Ballardini, G., Keplinger, C., Kuchenbecker, K. J.

Cutaneous Electrohydraulic (CUTE) Wearable Devices for Pleasant Broad-Bandwidth Haptic Cues

Advanced Science, (2402461):1-14, September 2024 (article)

Tashiro, N., Faulkner, R., Melnyk, S., Rodriguez, T. R., Javot, B., Tahouni, Y., Cheng, T., Wood, D., Menges, A., Kuchenbecker, K. J.

Building Instructions You Can Feel: Edge-Changing Haptic Devices for Digitally Guided Construction

ACM Transactions on Computer-Human Interaction, September 2024 (article) Accepted

Sharon, Y., Nevo, T., Naftalovich, D., Bahar, L., Refaely, Y., Nisky, I.

Augmenting Robot-Assisted Pattern Cutting With Periodic Perturbations – Can We Make Dry Lab Training More Realistic?

IEEE Transactions on Biomedical Engineering, August 2024 (article)

Khojasteh, B., Solowjow, F., Trimpe, S., Kuchenbecker, K. J.

Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test

IEEE Transactions on Automation Science and Engineering, 21(3):4432-4447, July 2024 (article)

Serhat, G., Kuchenbecker, K. J.

Fingertip Dynamic Response Simulated Across Excitation Points and Frequencies

Biomechanics and Modeling in Mechanobiology, 23, pages: 1369-1376, May 2024 (article)

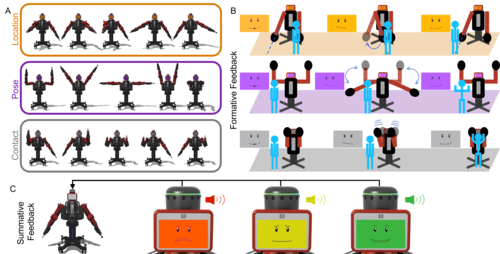

Mohan, M., Nunez, C. M., Kuchenbecker, K. J.

Closing the Loop in Minimally Supervised Human-Robot Interaction: Formative and Summative Feedback

Scientific Reports, 14(10564):1-18, May 2024 (article)

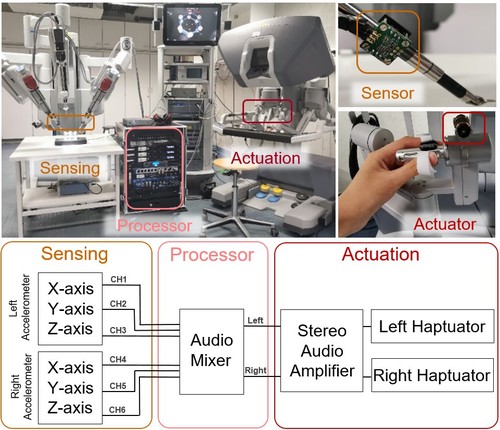

Gong, Y., Mat Husin, H., Erol, E., Ortenzi, V., Kuchenbecker, K. J.

AiroTouch: Enhancing Telerobotic Assembly through Naturalistic Haptic Feedback of Tool Vibrations

Frontiers in Robotics and AI, 11(1355205):1-15, May 2024 (article)

Allemang-Trivalle, A., Donjat, J., Bechu, G., Coppin, G., Chollet, M., Klaproth, O. W., Mitschke, A., Schirrmann, A., Cao, C. G. L.

Modeling Fatigue in Manual and Robot-Assisted Work for Operator 5.0

IISE Transactions on Occupational Ergonomics and Human Factors, 12(1-2):135-147, March 2024 (article)

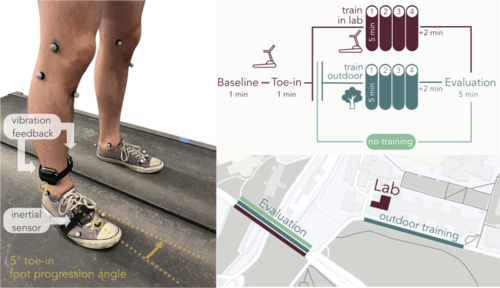

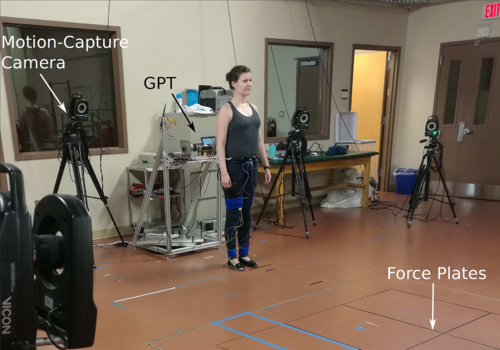

Rokhmanova, N., Pearl, O., Kuchenbecker, K. J., Halilaj, E.

IMU-Based Kinematics Estimation Accuracy Affects Gait Retraining Using Vibrotactile Cues

IEEE Transactions on Neural Systems and Rehabilitation Engineering, 32, pages: 1005-1012, February 2024 (article)

Fitter, N. T., Mohan, M., Preston, R. C., Johnson, M. J., Kuchenbecker, K. J.

How Should Robots Exercise with People? Robot-Mediated Exergames Win with Music, Social Analogues, and Gameplay Clarity

Frontiers in Robotics and AI, 10(1155837):1-18, January 2024 (article)

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

Robust Surface Recognition with the Maximum Mean Discrepancy: Degrading Haptic-Auditory Signals through Bandwidth and Noise

IEEE Transactions on Haptics, 17(1):58-65, January 2024, Presented at the IEEE Haptics Symposium (article)

2023

L’Orsa, R., Lama, S., Westwick, D., Sutherland, G., Kuchenbecker, K. J.

Towards Semi-Automated Pleural Cavity Access for Pneumothorax in Austere Environments

Acta Astronautica, 212, pages: 48-53, November 2023 (article)

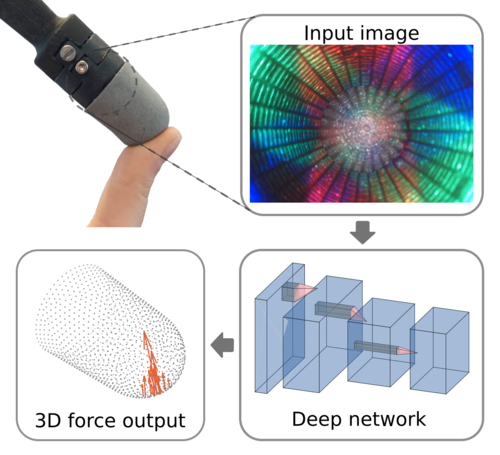

Andrussow, I., Sun, H., Kuchenbecker, K. J., Martius, G.

Minsight: A Fingertip-Sized Vision-Based Tactile Sensor for Robotic Manipulation

Advanced Intelligent Systems, 5(8), August 2023, Inside back cover (article)

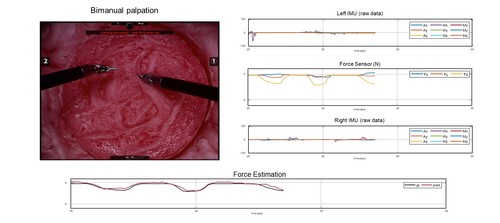

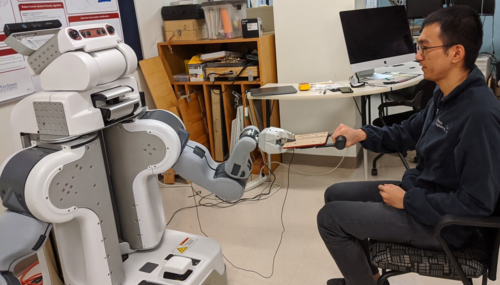

Lee, Y., Husin, H. M., Forte, M., Lee, S., Kuchenbecker, K. J.

Learning to Estimate Palpation Forces in Robotic Surgery From Visual-Inertial Data

IEEE Transactions on Medical Robotics and Bionics, 5(3):496-506, August 2023 (article)

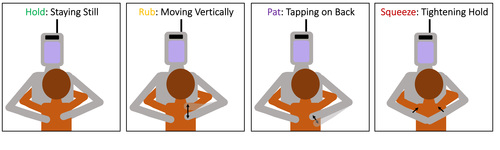

Block, A. E., Seifi, H., Hilliges, O., Gassert, R., Kuchenbecker, K. J.

In the Arms of a Robot: Designing Autonomous Hugging Robots with Intra-Hug Gestures

ACM Transactions on Human-Robot Interaction, 12(2):1-49, June 2023, Special Issue on Designing the Robot Body: Critical Perspectives on Affective Embodied Interaction (article)

Gertler, I., Serhat, G., Kuchenbecker, K. J.

Generating Clear Vibrotactile Cues with a Magnet Embedded in a Soft Finger Sheath

Soft Robotics, 10(3):624-635, June 2023 (article)

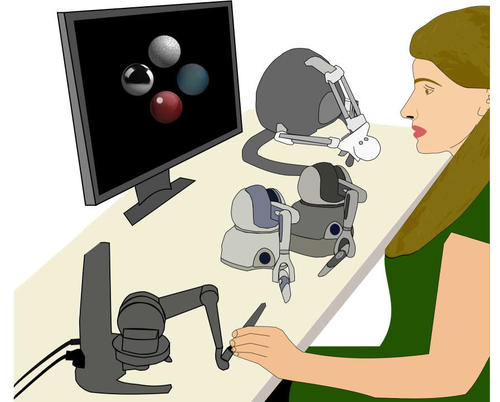

Fazlollahi, F., Kuchenbecker, K. J.

Haptify: A Measurement-Based Benchmarking System for Grounded Force-Feedback Devices

IEEE Transactions on Robotics, 39(2):1622-1636, April 2023 (article)

Brown, J. D., Kuchenbecker, K. J.

Effects of Automated Skill Assessment on Robotic Surgery Training

The International Journal of Medical Robotics and Computer Assisted Surgery, 19(2):e2492, April 2023 (article)

Spiers, A. J., Young, E., Kuchenbecker, K. J.

The S-BAN: Insights into the Perception of Shape-Changing Haptic Interfaces via Virtual Pedestrian Navigation

ACM Transactions on Computer-Human Interaction, 30(1):1-31, March 2023 (article)

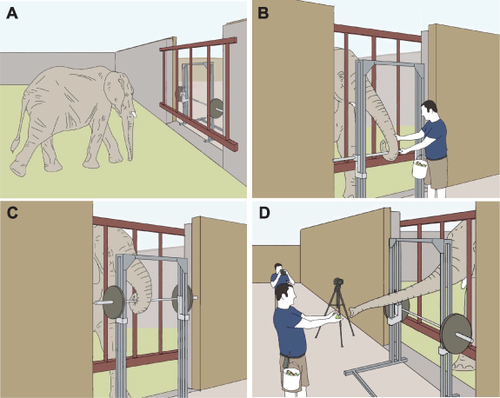

Schulz, A., Reidenberg, J., Wu, J. N., Tang, C. Y., Seleb, B., Mancebo, J., Elgart, N., Hu, D.

Elephant trunks use an adaptable prehensile grip

Bioinspiration and Biomimetics, 18(2), February 2023 (article)

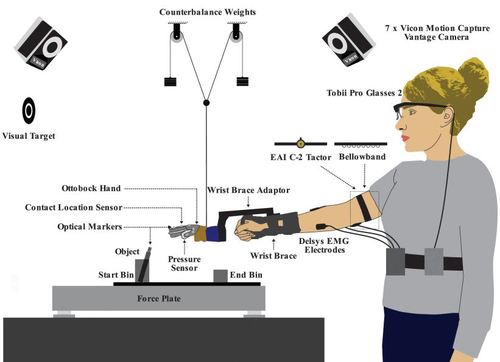

Thomas, N., Fazlollahi, F., Kuchenbecker, K. J., Brown, J. D.

The Utility of Synthetic Reflexes and Haptic Feedback for Upper-Limb Prostheses in a Dexterous Task Without Direct Vision

IEEE Transactions on Neural Systems and Rehabilitation Engineering, 31, pages: 169-179, January 2023 (article)

Lee, H., Sun, H., Park, H., Serhat, G., Javot, B., Martius, G., Kuchenbecker, K. J.

Predicting the Force Map of an ERT-Based Tactile Sensor Using Simulation and Deep Networks

IEEE Transactions on Automation Science and Engineering, 20(1):425-439, January 2023 (article)

2022

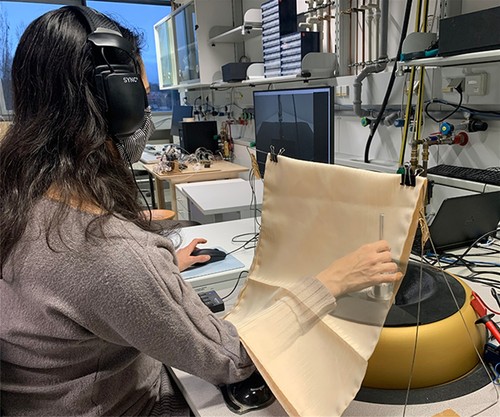

Richardson, B. A., Vardar, Y., Wallraven, C., Kuchenbecker, K. J.

Learning to Feel Textures: Predicting Perceptual Similarities from Unconstrained Finger-Surface Interactions

IEEE Transactions on Haptics, 15(4):705-717, October 2022, Benjamin A. Richardson and Yasemin Vardar contributed equally to this publication. (article)

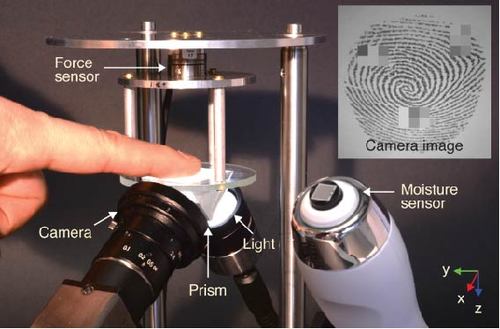

Serhat, G., Vardar, Y., Kuchenbecker, K. J.

Contact Evolution of Dry and Hydrated Fingertips at Initial Touch

PLOS ONE, 17(7):e0269722, July 2022, Gokhan Serhat and Yasemin Vardar contributed equally to this publication. (article)

Lee, H., Tombak, G. I., Park, G., Kuchenbecker, K. J.

Perceptual Space of Algorithms for Three-to-One Dimensional Reduction of Realistic Vibrations

IEEE Transactions on Haptics, 15(3):521-534, July 2022 (article)

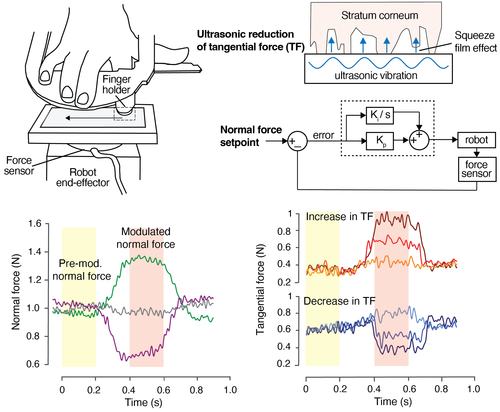

Gueorguiev, D., Lambert, J., Thonnard, J., Kuchenbecker, K. J.

Normal and Tangential Forces Combine to Convey Contact Pressure During Dynamic Tactile Stimulation

Scientific Reports, 12, pages: 8215, May 2022 (article)

Rokhmanova, N., Kuchenbecker, K. J., Shull, P. B., Ferber, R., Halilaj, E.

Predicting Knee Adduction Moment Response to Gait Retraining with Minimal Clinical Data

PLOS Computational Biology, 18(5):e1009500, May 2022 (article)

Forte, M., Gourishetti, R., Javot, B., Engler, T., Gomez, E. D., Kuchenbecker, K. J.

Design of Interactive Augmented Reality Functions for Robotic Surgery and Evaluation in Dry-Lab Lymphadenectomy

The International Journal of Medical Robotics and Computer Assisted Surgery, 18(2):e2351, April 2022 (article)

Burns, R. B., Lee, H., Seifi, H., Faulkner, R., Kuchenbecker, K. J.

Endowing a NAO Robot with Practical Social-Touch Perception

Frontiers in Robotics and AI, 9, pages: 840335, April 2022 (article)

Park, K., Lee, H., Kuchenbecker, K. J., Kim, J.

Adaptive Optimal Measurement Algorithm for ERT-Based Large-Area Tactile Sensors

IEEE/ASME Transactions on Mechatronics, 27(1):304-314, February 2022 (article)

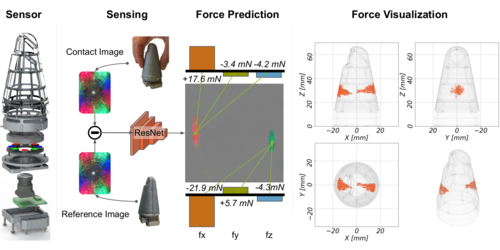

Sun, H., Kuchenbecker, K. J., Martius, G.

A Soft Thumb-Sized Vision-Based Sensor with Accurate All-Round Force Perception

Nature Machine Intelligence, 4(2):135-145, February 2022 (article)

Gourishetti, R., Kuchenbecker, K. J.

Evaluation of Vibrotactile Output from a Rotating Motor Actuator

IEEE Transactions on Haptics, 15(1):39-44, January 2022, Presented at the IEEE Haptics Symposium (article)

2021

Ambron, E., Buxbaum, L. J., Miller, A., Stoll, H., Kuchenbecker, K. J., Coslett, H. B.

Virtual Reality Treatment Displaying the Missing Leg Improves Phantom Limb Pain: A Small Clinical Trial

Neurorehabilitation and Neural Repair, 35(12):1100-1111, December 2021 (article)

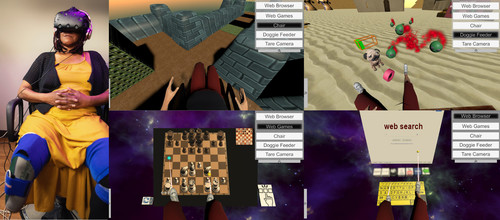

Hu, S., Mendonca, R., Johnson, M. J., Kuchenbecker, K. J.

Robotics for Occupational Therapy: Learning Upper-Limb Exercises From Demonstrations

IEEE Robotics and Automation Letters, 6(4):7781-7788, October 2021 (article)

Hu, S., Fjeld, K., Vasudevan, E. V., Kuchenbecker, K. J.

A Brake-Based Overground Gait Rehabilitation Device for Altering Propulsion Impulse Symmetry

Sensors, 21(19):6617, October 2021 (article)

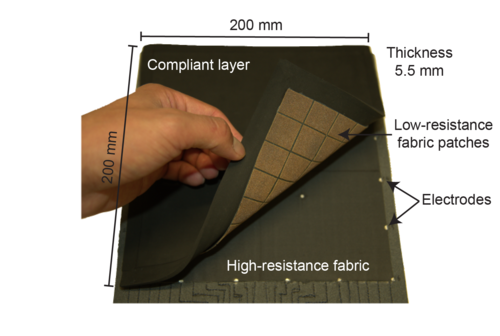

Lee, H., Park, K., Kim, J., Kuchenbecker, K. J.

Piezoresistive Textile Layer and Distributed Electrode Structure for Soft Whole-Body Tactile Skin

Smart Materials and Structures, 30(8):085036, July 2021, Hyosang Lee and Kyungseo Park contributed equally to this publication. (article)

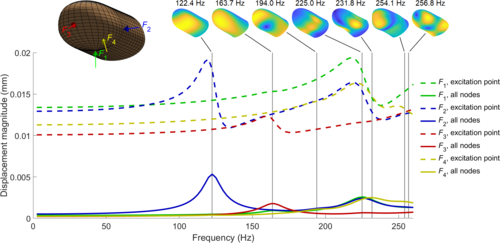

Serhat, G., Kuchenbecker, K. J.

Free and Forced Vibration Modes of the Human Fingertip

Applied Sciences, 11(12):5709, June 2021 (article)

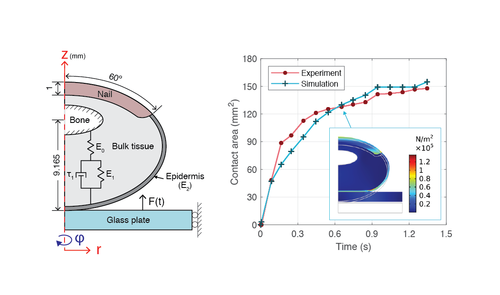

Nam, S., Kuchenbecker, K. J.

Optimizing a Viscoelastic Finite Element Model to Represent the Dry, Natural, and Moist Human Finger Pressing on Glass

IEEE Transactions on Haptics, 14(2):303-309, May 2021, Presented at the IEEE World Haptics Conference (WHC) (article)

Caccianiga, G., Mariani, A., Galli de Paratesi, C., Menciassi, A., De Momi, E.

Multi-Sensory Guidance and Feedback for Simulation-Based Training in Robot Assisted Surgery: A Preliminary Comparison of Visual, Haptic, and Visuo-Haptic

IEEE Robotics and Automation Letters, 6(2):3801-3808, April 2021 (article)

Vardar, Y., Kuchenbecker, K. J.

Finger Motion and Contact by a Second Finger Influence the Tactile Perception of Electrovibration

Journal of the Royal Society Interface, 18(176):20200783, March 2021 (article)

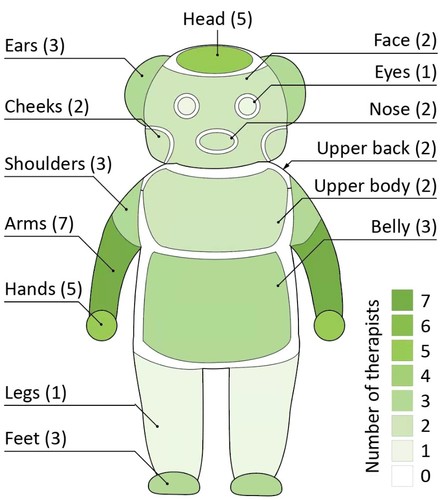

Burns, R. B., Seifi, H., Lee, H., Kuchenbecker, K. J.

Getting in Touch with Children with Autism: Specialist Guidelines for a Touch-Perceiving Robot

Paladyn, Journal of Behavioral Robotics, 12(1):115-135, January 2021 (article)

2020

Spiers, A. J., Morgan, A. S., Srinivasan, K., Calli, B., Dollar, A. M.

Using a Variable-Friction Robot Hand to Determine Proprioceptive Features for Object Classification During Within-Hand-Manipulation

IEEE Transactions on Haptics, 13(3):600-610, July 2020 (article)

Nam, S., Vardar, Y., Gueorguiev, D., Kuchenbecker, K. J.

Physical Variables Underlying Tactile Stickiness during Fingerpad Detachment

Frontiers in Neuroscience, 14, pages: 235, April 2020 (article)

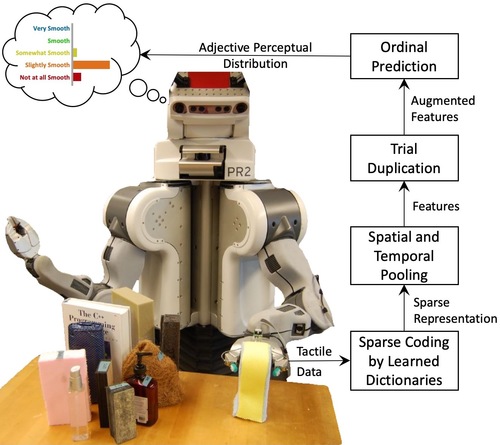

Richardson, B. A., Kuchenbecker, K. J.

Learning to Predict Perceptual Distributions of Haptic Adjectives

Frontiers in Neurorobotics, 13, pages: 1-16, February 2020 (article)

Fitter, N. T., Mohan, M., Kuchenbecker, K. J., Johnson, M. J.

Exercising with Baxter: Preliminary Support for Assistive Social-Physical Human-Robot Interaction

Journal of NeuroEngineering and Rehabilitation, 17, pages: 1-22, February 2020 (article)

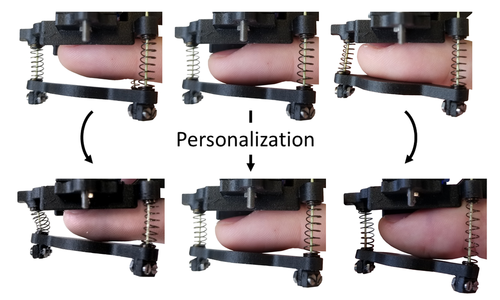

Young, E. M., Gueorguiev, D., Kuchenbecker, K. J., Pacchierotti, C.

Compensating for Fingertip Size to Render Tactile Cues More Accurately

IEEE Transactions on Haptics, 13(1):144-151, January 2020, Katherine J. Kuchenbecker and Claudio Pacchierotti contributed equally to this publication. Presented at the IEEE World Haptics Conference (WHC). (article)

2019

Hu, S., Kuchenbecker, K. J.

Hierarchical Task-Parameterized Learning from Demonstration for Collaborative Object Movement

Applied Bionics and Biomechanics, (9765383), December 2019 (article)

Park, K., Kim, S., Lee, H., Park, I., Kim, J.

Low-Hysteresis and Low-Interference Soft Tactile Sensor Using a Conductive Coated Porous Elastomer and a Structure for Interference Reduction

Sensors and Actuators A: Physical, 295, pages: 541-550, August 2019 (article)

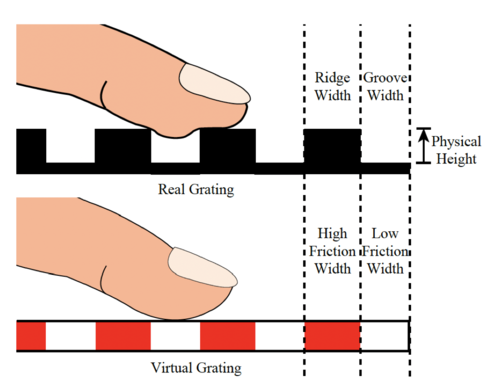

Isleyen, A., Vardar, Y., Basdogan, C.

Tactile Roughness Perception of Virtual Gratings by Electrovibration

IEEE Transactions on Haptics, 13(3):562-570, July 2019 (article)