2024

Cao, C. G. L., Javot, B., Bhattarai, S., Bierig, K., Oreshnikov, I., Volchkov, V. V.

Fiber-Optic Shape Sensing Using Neural Networks Operating on Multispecklegrams

IEEE Sensors Journal, 24(17):27532-27540, September 2024 (article)

Sanchez-Tamayo, N., Yoder, Z., Rothemund, P., Ballardini, G., Keplinger, C., Kuchenbecker, K. J.

Cutaneous Electrohydraulic (CUTE) Wearable Devices for Pleasant Broad-Bandwidth Haptic Cues

Advanced Science, (2402461):1-14, September 2024 (article)

Tashiro, N., Faulkner, R., Melnyk, S., Rodriguez, T. R., Javot, B., Tahouni, Y., Cheng, T., Wood, D., Menges, A., Kuchenbecker, K. J.

Building Instructions You Can Feel: Edge-Changing Haptic Devices for Digitally Guided Construction

ACM Transactions on Computer-Human Interaction, September 2024 (article) Accepted

Khojasteh, B., Solowjow, F., Trimpe, S., Kuchenbecker, K. J.

Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test

IEEE Transactions on Automation Science and Engineering, 21(3):4432-4447, July 2024 (article)

L’Orsa, R., Bisht, A., Yu, L., Murari, K., Westwick, D. T., Sutherland, G. R., Kuchenbecker, K. J.

Reflectance Outperforms Force and Position in Model-Free Needle Puncture Detection

In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, USA, July 2024 (inproceedings) Accepted

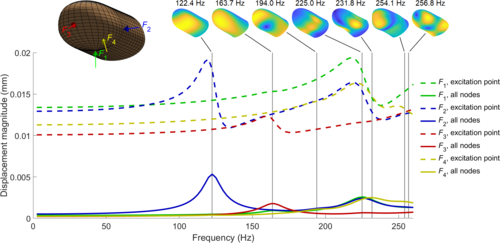

Serhat, G., Kuchenbecker, K. J.

Fingertip Dynamic Response Simulated Across Excitation Points and Frequencies

Biomechanics and Modeling in Mechanobiology, 23, pages: 1369-1376, May 2024 (article)

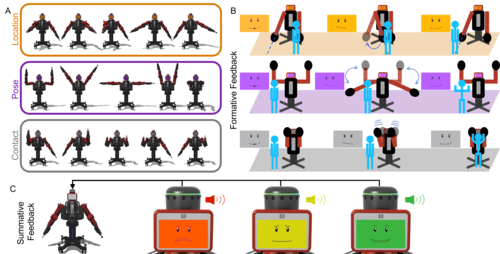

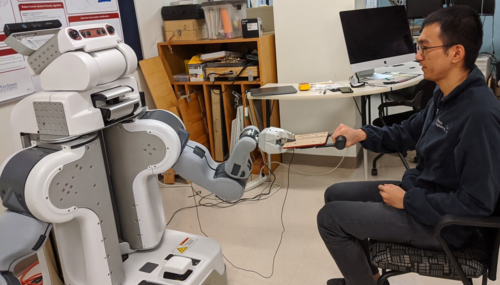

Mohan, M., Nunez, C. M., Kuchenbecker, K. J.

Closing the Loop in Minimally Supervised Human-Robot Interaction: Formative and Summative Feedback

Scientific Reports, 14(10564):1-18, May 2024 (article)

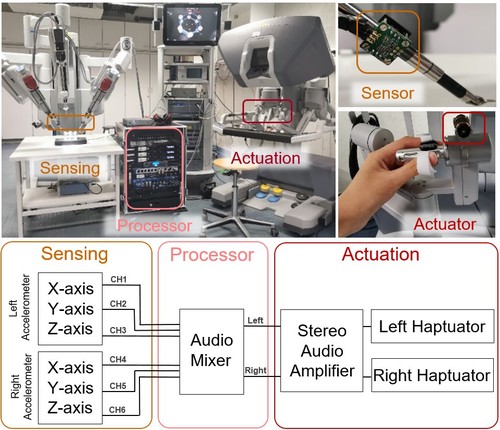

Gong, Y., Husin, H. M., Erol, E., Ortenzi, V., Kuchenbecker, K. J.

AiroTouch: Enhancing Telerobotic Assembly through Naturalistic Haptic Feedback of Tool Vibrations

Frontiers in Robotics and AI, 11, pages: 1-15, May 2024 (article)

Mohan, M., Mat Husin, H., Kuchenbecker, K. J.

Expert Perception of Teleoperated Social Exercise Robots

In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction (HRI), pages: 769-773, Boulder, USA, March 2024, Late-Breaking Report (LBR) (5 pages) presented at the IEEE/ACM International Conference on Human-Robot Interaction (HRI) (inproceedings)

Allemang-Trivalle, A., Donjat, J., Bechu, G., Coppin, G., Chollet, M., Klaproth, O. W., Mitschke, A., Schirrmann, A., Cao, C. G. L.

Modeling Fatigue in Manual and Robot-Assisted Work for Operator 5.0

IISE Transactions on Occupational Ergonomics and Human Factors, 12(1-2):135-147, March 2024 (article)

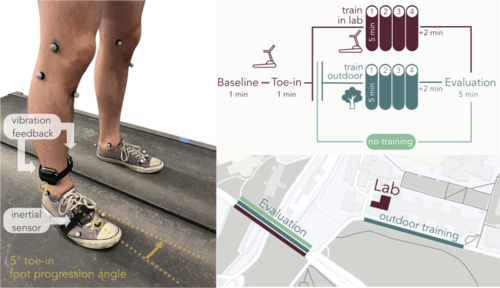

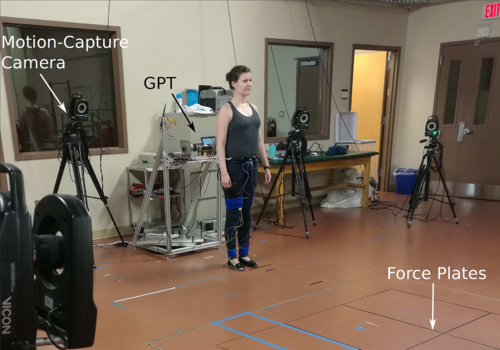

Rokhmanova, N., Pearl, O., Kuchenbecker, K. J., Halilaj, E.

IMU-Based Kinematics Estimation Accuracy Affects Gait Retraining Using Vibrotactile Cues

IEEE Transactions on Neural Systems and Rehabilitation Engineering, 32, pages: 1005-1012, February 2024 (article)

Fitter, N. T., Mohan, M., Preston, R. C., Johnson, M. J., Kuchenbecker, K. J.

How Should Robots Exercise with People? Robot-Mediated Exergames Win with Music, Social Analogues, and Gameplay Clarity

Frontiers in Robotics and AI, 10(1155837):1-18, January 2024 (article)

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

Robust Surface Recognition with the Maximum Mean Discrepancy: Degrading Haptic-Auditory Signals through Bandwidth and Noise

IEEE Transactions on Haptics, 17(1):58-65, January 2024, Presented at the IEEE Haptics Symposium (article)

2023

L’Orsa, R., Lama, S., Westwick, D., Sutherland, G., Kuchenbecker, K. J.

Towards Semi-Automated Pleural Cavity Access for Pneumothorax in Austere Environments

Acta Astronautica, 212, pages: 48-53, November 2023 (article)

Allemang-Trivalle, A.

Enhancing Surgical Team Collaboration and Situation Awareness through Multimodal Sensing

In Proceedings of the ACM International Conference on Multimodal Interaction, pages: 716-720, Extended abstract (5 pages) presented at the ACM International Conference on Multimodal Interaction (ICMI) Doctoral Consortium, Paris, France, October 2023 (inproceedings)

Burns, R. B., Ojo, F., Kuchenbecker, K. J.

Wear Your Heart on Your Sleeve: Users Prefer Robots with Emotional Reactions to Touch and Ambient Moods

In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), pages: 1914-1921, Busan, South Korea, August 2023 (inproceedings)

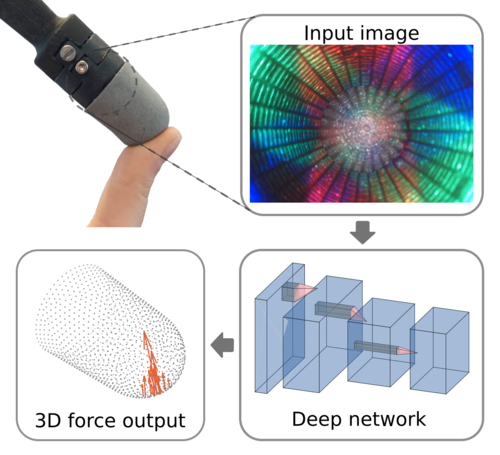

Andrussow, I., Sun, H., Kuchenbecker, K. J., Martius, G.

Minsight: A Fingertip-Sized Vision-Based Tactile Sensor for Robotic Manipulation

Advanced Intelligent Systems, 5(8), August 2023, Inside back cover (article)

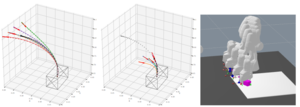

Oh, Y., Passy, J., Mainprice, J.

Augmenting Human Policies using Riemannian Metrics for Human-Robot Shared Control

In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), pages: 1612-1618, Busan, Korea, August 2023 (inproceedings)

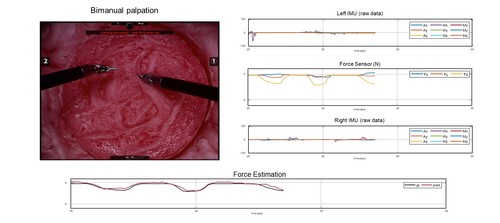

Lee, Y., Husin, H. M., Forte, M., Lee, S., Kuchenbecker, K. J.

Learning to Estimate Palpation Forces in Robotic Surgery From Visual-Inertial Data

IEEE Transactions on Medical Robotics and Bionics, 5(3):496-506, August 2023 (article)

Gong, Y., Javot, B., Lauer, A. P. R., Sawodny, O., Kuchenbecker, K. J.

Naturalistic Vibrotactile Feedback Could Facilitate Telerobotic Assembly on Construction Sites

In Proceedings of the IEEE World Haptics Conference (WHC), pages: 169-175, Delft, The Netherlands, July 2023 (inproceedings)

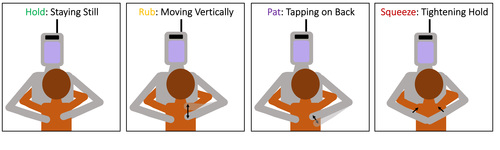

Block, A. E., Seifi, H., Hilliges, O., Gassert, R., Kuchenbecker, K. J.

In the Arms of a Robot: Designing Autonomous Hugging Robots with Intra-Hug Gestures

ACM Transactions on Human-Robot Interaction, 12(2):1-49, June 2023, Special Issue on Designing the Robot Body: Critical Perspectives on Affective Embodied Interaction (article)

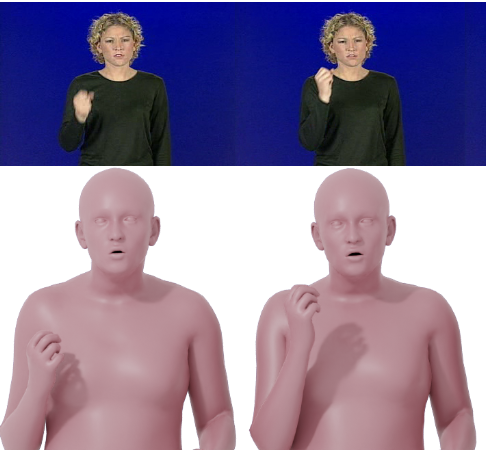

Forte, M., Kulits, P., Huang, C. P., Choutas, V., Tzionas, D., Kuchenbecker, K. J., Black, M. J.

Reconstructing Signing Avatars from Video Using Linguistic Priors

In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages: 12791-12801, June 2023 (inproceedings)

Gertler, I., Serhat, G., Kuchenbecker, K. J.

Generating Clear Vibrotactile Cues with a Magnet Embedded in a Soft Finger Sheath

Soft Robotics, 10(3):624-635, June 2023 (article)

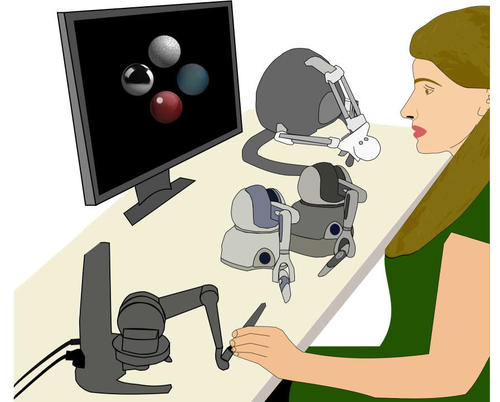

Fazlollahi, F., Kuchenbecker, K. J.

Haptify: A Measurement-Based Benchmarking System for Grounded Force-Feedback Devices

IEEE Transactions on Robotics, 39(2):1622-1636, April 2023 (article)

Brown, J. D., Kuchenbecker, K. J.

Effects of Automated Skill Assessment on Robotic Surgery Training

The International Journal of Medical Robotics and Computer Assisted Surgery, 19(2):e2492, April 2023 (article)

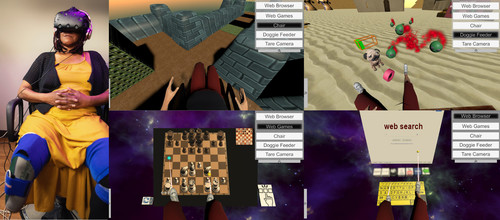

Spiers, A. J., Young, E., Kuchenbecker, K. J.

The S-BAN: Insights into the Perception of Shape-Changing Haptic Interfaces via Virtual Pedestrian Navigation

ACM Transactions on Computer-Human Interaction, 30(1):1-31, March 2023 (article)

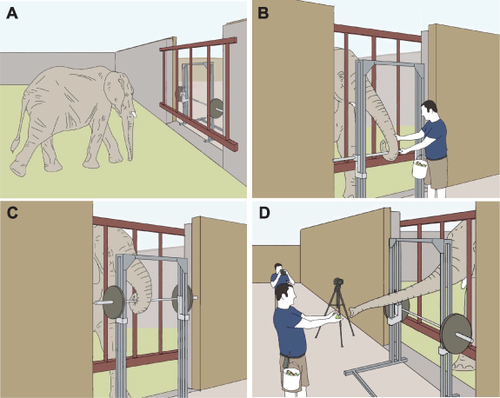

Schulz, A., Reidenberg, J., Wu, J. N., Tang, C. Y., Seleb, B., Mancebo, J., Elgart, N., Hu, D.

Elephant trunks use an adaptable prehensile grip

Bioinspiration and Biomimetics, 18(2), February 2023 (article)

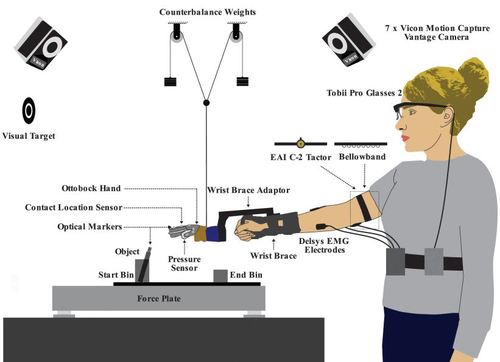

Thomas, N., Fazlollahi, F., Kuchenbecker, K. J., Brown, J. D.

The Utility of Synthetic Reflexes and Haptic Feedback for Upper-Limb Prostheses in a Dexterous Task Without Direct Vision

IEEE Transactions on Neural Systems and Rehabilitation Engineering, 31, pages: 169-179, January 2023 (article)

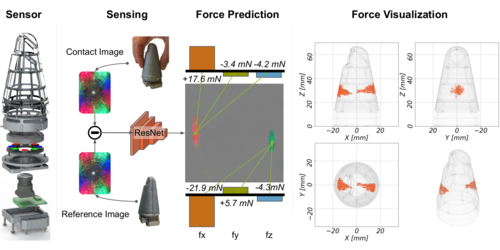

Lee, H., Sun, H., Park, H., Serhat, G., Javot, B., Martius, G., Kuchenbecker, K. J.

Predicting the Force Map of an ERT-Based Tactile Sensor Using Simulation and Deep Networks

IEEE Transactions on Automation Science and Engineering, 20(1):425-439, January 2023 (article)

2022

Zruya, O., Sharon, Y., Kossowsky, H., Forni, F., Geftler, A., Nisky, I.

A New Power Law Linking the Speed to the Geometry of Tool-Tip Orientation in Teleoperation of a Robot-Assisted Surgical System

IEEE Robotics and Automation Letters, 7(4):10762-10769, October 2022 (article)

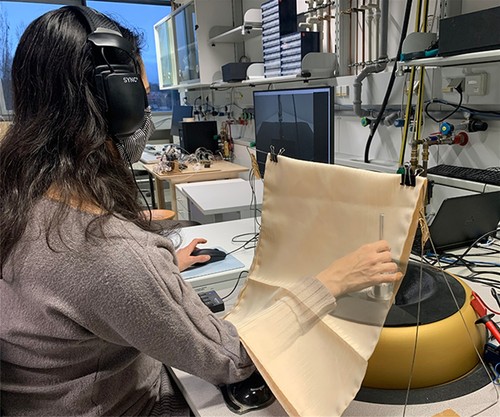

Richardson, B. A., Vardar, Y., Wallraven, C., Kuchenbecker, K. J.

Learning to Feel Textures: Predicting Perceptual Similarities from Unconstrained Finger-Surface Interactions

IEEE Transactions on Haptics, 15(4):705-717, October 2022, Benjamin A. Richardson and Yasemin Vardar contributed equally to this publication. (article)

L’Orsa, R., Lama, S., Westwick, D., Sutherland, G., Kuchenbecker, K. J.

Towards Semi-Automated Pleural Cavity Access for Pneumothorax in Austere Environments

In Proceedings of the International Astronautical Congress (IAC), pages: 1-7, Paris, France, September 2022 (inproceedings)

Allemang-Trivalle, A., Eden, J., Ivanova, E., Huang, Y., Burdet, E.

How Long Does It Take to Learn Trimanual Coordination?

In Proceedings of the IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), pages: 211-216, Napoli, Italy, August 2022 (inproceedings)

Machaca, S., Cao, E., Chi, A., Adrales, G., Kuchenbecker, K. J., Brown, J. D.

Wrist-Squeezing Force Feedback Improves Accuracy and Speed in Robotic Surgery Training

In Proceedings of the IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), pages: 1-8, Seoul, Korea, August 2022 (inproceedings)

Allemang-Trivalle, A., Eden, J., Huang, Y., Ivanova, E., Burdet, E.

Comparison of Human Trimanual Performance Between Independent and Dependent Multiple-Limb Training Modes

In Proceedings of the IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), Seoul, Korea, August 2022 (inproceedings)

Serhat, G., Vardar, Y., Kuchenbecker, K. J.

Contact Evolution of Dry and Hydrated Fingertips at Initial Touch

PLOS ONE, 17(7):e0269722, July 2022, Gokhan Serhat and Yasemin Vardar contributed equally to this publication. (article)

Lee, H., Tombak, G. I., Park, G., Kuchenbecker, K. J.

Perceptual Space of Algorithms for Three-to-One Dimensional Reduction of Realistic Vibrations

IEEE Transactions on Haptics, 15(3):521-534, July 2022 (article)

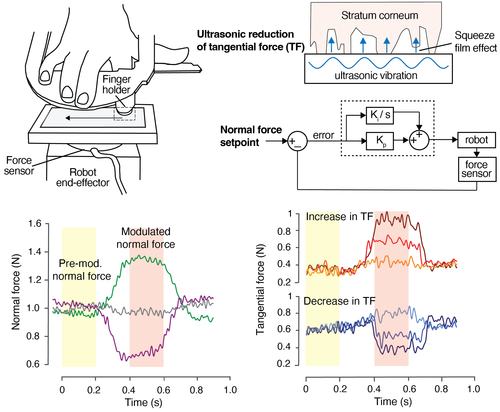

Gueorguiev, D., Lambert, J., Thonnard, J., Kuchenbecker, K. J.

Normal and Tangential Forces Combine to Convey Contact Pressure During Dynamic Tactile Stimulation

Scientific Reports, 12, pages: 8215, May 2022 (article)

Rokhmanova, N., Kuchenbecker, K. J., Shull, P. B., Ferber, R., Halilaj, E.

Predicting Knee Adduction Moment Response to Gait Retraining with Minimal Clinical Data

PLOS Computational Biology, 18(5):e1009500, May 2022 (article)

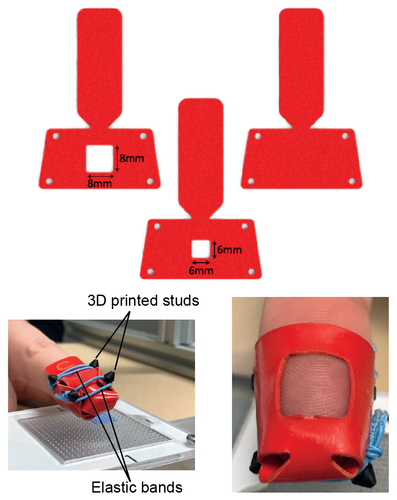

Gueorguiev, D., Javot, B., Spiers, A., Kuchenbecker, K. J.

Larger Skin-Surface Contact Through a Fingertip Wearable Improves Roughness Perception

In Haptics: Science, Technology, Applications, pages: 171-179, Lecture Notes in Computer Science, 13235 (Editors: Seifi, H., Kappers, A. M. L., Schneider, O., Drewing, K., Pacchierotti, C., Abbasimoshaei, A., Huisman, G., Kern, T. A.), Springer, Cham, 13th International Conference on Human Haptic Sensing and Touch Enabled Computer Applications (EuroHaptics 2022), May 2022 (inproceedings)

Forte, M., Gourishetti, R., Javot, B., Engler, T., Gomez, E. D., Kuchenbecker, K. J.

Design of Interactive Augmented Reality Functions for Robotic Surgery and Evaluation in Dry-Lab Lymphadenectomy

The International Journal of Medical Robotics and Computer Assisted Surgery, 18(2):e2351, April 2022 (article)

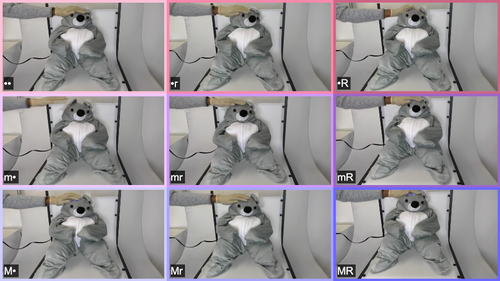

Burns, R. B., Lee, H., Seifi, H., Faulkner, R., Kuchenbecker, K. J.

Endowing a NAO Robot with Practical Social-Touch Perception

Frontiers in Robotics and AI, 9, pages: 840335, April 2022 (article)

Ortenzi, V., Filipovica, M., Abdlkarim, D., Pardi, T., Takahashi, C., Wing, A. M., Luca, M. D., Kuchenbecker, K. J.

Robot, Pass Me the Tool: Handle Visibility Facilitates Task-Oriented Handovers

In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction (HRI), pages: 256-264, March 2022, Valerio Ortenzi and Maija Filipovica contributed equally to this publication. (inproceedings)

Park, K., Lee, H., Kuchenbecker, K. J., Kim, J.

Adaptive Optimal Measurement Algorithm for ERT-Based Large-Area Tactile Sensors

IEEE/ASME Transactions on Mechatronics, 27(1):304-314, February 2022 (article)

Sun, H., Kuchenbecker, K. J., Martius, G.

A Soft Thumb-Sized Vision-Based Sensor with Accurate All-Round Force Perception

Nature Machine Intelligence, 4(2):135-145, February 2022 (article)

Gourishetti, R., Kuchenbecker, K. J.

Evaluation of Vibrotactile Output from a Rotating Motor Actuator

IEEE Transactions on Haptics, 15(1):39-44, January 2022, Presented at the IEEE Haptics Symposium (article)

2021

Ambron, E., Buxbaum, L. J., Miller, A., Stoll, H., Kuchenbecker, K. J., Coslett, H. B.

Virtual Reality Treatment Displaying the Missing Leg Improves Phantom Limb Pain: A Small Clinical Trial

Neurorehabilitation and Neural Repair, 35(12):1100-1111, December 2021 (article)

Hu, S., Mendonca, R., Johnson, M. J., Kuchenbecker, K. J.

Robotics for Occupational Therapy: Learning Upper-Limb Exercises From Demonstrations

IEEE Robotics and Automation Letters, 6(4):7781-7788, October 2021 (article)

Hu, S., Fjeld, K., Vasudevan, E. V., Kuchenbecker, K. J.

A Brake-Based Overground Gait Rehabilitation Device for Altering Propulsion Impulse Symmetry

Sensors, 21(19):6617, October 2021 (article)

Thomas, N., Fazlollahi, F., Brown, J. D., Kuchenbecker, K. J.

Sensorimotor-Inspired Tactile Feedback and Control Improve Consistency of Prosthesis Manipulation in the Absence of Direct Vision

In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages: 6174-6181, Prague, Czech Republic, September 2021 (inproceedings)