Department Talks

Designing Mobile Robots for Physical Interaction with Sandy Terrains

- 18 March 2024 • 16:00—17:00

- Dr. Hannah Stuart

- Hybrid - Webex plus in-person attendance in Copper (2R04)

One day, robots will widely support the exploration and development of unstructured natural environments. Much of the work I will present in this lecture is supported by NASA and focuses on robot design research relevant to accessing the surfaces of the Moon and Mars. Tensile elements appear repeatedly across the wide array of missions envisioned to support human or robotic exploration and habitation of the Moon. With a single secured tether, rovers, astronauts, or both could belay down into steep lunar craters to explore permanently shadowed regions; the tether prevents catastrophic slipping or falling and mitigates risk while we search for water resources. Like a tent that relies on tensioned ropes to sustain its structure, tensegrity-based antennae, dishes, and habitats can be made large and strong using very lightweight materials. We ask: Where does the tension in these lightweight systems go? Ultimately, these concepts will require anchors that attach cables autonomously, securely, and reliably to the surrounding regolith to react tensile forces. Thus, developing new autonomous burrowing and anchoring technologies, along with modeling techniques to guide their design and adoption, is critical across multiple space-relevant programs. Yet burrowing and anchoring are hard problems for multiple reasons, which remain fundamental areas of discovery. Our goal is to understand how the mechanics of granular and rocky interaction influence the design and control of small-scale robotic systems for such forceful manipulation. I will present new mobility and anchoring strategies that enable small robots to resist, or "tug," massive loads in loose terrain such as regolith. The idea is that multiple tethered agents can work together to perform large-scale manipulation, even where traction and gravity are low.
The resulting generalizable methods for rapidly modeling granular interactions also inform new mobility gaits for moving over, through, or under loose sand more efficiently.

Organizers: Katherine J. Kuchenbecker

- Marie Großmann

- Hybrid - Webex plus in-person attendance in 5N18

Perceiving the world, including seeing and hearing, tasting and smelling, touching and feeling, is a necessary social skill for becoming a social counterpart. In this context, the construction of perceiving technology is an intersection at which technical artifacts gain the capability to sense and interact with their environment. Sensors as technical artifacts not only measure various (physical) states, presenting results that influence perceptions and actions; their outputs also undergo technical and computational processing. Sensors generate differences by capturing and measuring variations in their surroundings. In this talk, I will share insights from my qualitative social research in the lab, taking a sociological engagement with technology, materiality, and science research as a starting point to sharpen a sociological perspective on constructing technical perceptions. The focus will be on how knowledge about perception is implemented in technology and materiality when sensors are constructed.

Organizers: Katherine J. Kuchenbecker

Biomechanics and Control of Agile Locomotion: from Walking to Jumping

- 07 December 2023 • 11:30—12:30

- Dr. Janneke Schwaner

- Hybrid - Webex plus in-person attendance in 5N18

Animals seem to navigate complex terrain effortlessly. This is in stark contrast with even the most advanced robots, illustrating that navigating complex terrain is by no means trivial. Humans’ neuromusculoskeletal system is equipped with two key mechanisms that allow us to recover from unexpected perturbations: intrinsic muscle properties and sensory-driven feedback control. We used unique in vivo and in situ approaches to explore how guinea fowl (Numida meleagris) integrate these two mechanisms to maintain robust locomotion. For example, our work showed modular task-level control of leg length and leg angular trajectory while navigating speed perturbations during walking, with different neuromechanical control and perturbation sensitivity in each actuation mode. We also discovered gait-specific control mechanisms in walking and running over obstacles. Additionally, by combining in vivo and in situ experimental approaches, we found that the guinea fowl lateral gastrocnemius (LG) muscle does not operate at its optimal length while producing force during walking and running, which provides a safety factor against unexpected perturbations. Lastly, we will also highlight work showing how kangaroo rats circumvent the mechanical limitations of skeletal muscle to jump, as well as how these animals overcome angular momentum limitations during aerial reorientation in predator-escape leaps. Elucidating frameworks of function, adaptability, and individual variation across neuromuscular systems will provide a stepping-stone for understanding fundamental muscle mechanics, sensory feedback, and neuromuscular health. Additionally, this research has the potential to reveal the functional significance of individual morphological, physiological, and neuromuscular variation in relation to locomotion.
This knowledge can subsequently inform individualized rehabilitation approaches and the treatment of neuromuscular conditions such as stroke-related motor impairments, which require an integrated understanding of the dynamic interactions between musculoskeletal mechanics and sensorimotor control. This work also provides foundational knowledge for the development of dynamic assistive devices and robots that can navigate complex terrain.

Organizers: Katherine J. Kuchenbecker, Andrew Schulz

- Dr. Diego Ospina

- Hybrid - Webex plus in-person attendance in 5N18

Project neuroArm was established in 2002 with the goal of building the world’s first robot for brain surgery and stereotaxy. Since the launch of the neuroArm robot (2007) and its integration into the neurosurgical operating room (May 2008), the project has continued to spawn new technological innovations, advancing tele-robotics through sensors and AI and developing intelligent surgical systems to improve surgical safety. This talk will provide a high-level overview of two such technologies that the Project neuroArm team is currently developing and deploying: i) neuroArm+HD, a medical-grade sensory immersive workstation designed to enhance learning, performance, and safety in robot-assisted microsurgery and tele-operation; and ii) SmartForceps, sensorized bipolar surgical forceps for real-time recording, display, monitoring, and uploading of tool-tissue interaction forces during surgery.

Organizers: Katherine J. Kuchenbecker, Rachael Lorsa

- Andreea Tulbure

- Hybrid - Webex plus in-person attendance in 5N18

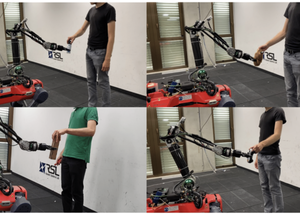

Deploying perception and control modules for handovers is challenging because they require a high degree of robustness and generalizability to work reliably across diverse objects and situations, as well as the adaptivity to adjust to individual preferences. On legged robots, deployment is particularly challenging because of limited computational resources and the additional sensing noise caused by locomotion. In this talk, I will discuss how we tackle some of these challenges, first by introducing our perception framework and discussing insights from the first human-robot handover user study with legged manipulators. I will then show how we combine imitation and reinforcement learning to achieve a degree of adaptivity during handovers. Finally, I will present our work in which the robot takes the collaboration partner's post-handover task into account when handing over an object. This is beneficial in situations where the human's range of motion is constrained during the handover or where time is critical.

Organizers: Katherine J. Kuchenbecker

Gesture-Based Nonverbal Interaction for Exercise Robots

- 09 October 2023 • 13:30—14:30

- Mayumi Mohan

- Webex plus in-person attendance in N3.022 in the Tübingen site of MPI-IS

When teaching or coaching, humans augment their words with carefully timed hand gestures, head and body movements, and facial expressions to provide feedback to their students. Robots, however, rarely utilize these nuanced cues. A minimally supervised social robot equipped with these abilities could support people in exercising, physical therapy, and learning new activities. This thesis examines how the intuitive power of human gestures can be harnessed to enhance human-robot interaction. To address this question, this research explores gesture-based interactions to expand the capabilities of a socially assistive robotic exercise coach, investigating the perspectives of both novice users and exercise-therapy experts. This thesis begins by concentrating on the user's engagement with the robot, analyzing the feasibility of minimally supervised gesture-based interactions. This exploration seeks to establish a framework in which robots can interact with users in a more intuitive and responsive manner. The investigation then shifts its focus toward the professionals who are integral to the success of these innovative technologies: the exercise-therapy experts. Roboticists face the challenge of translating the knowledge of these experts into robotic interactions. We address this challenge by developing a teleoperation algorithm that can enable exercise therapists to create customized gesture-based interactions for a robot. Thus, this thesis lays the groundwork for dynamic gesture-based interactions in minimally supervised environments, with implications for not only exercise-coach robots but also broader applications in human-robot interaction.

Organizers: Mayumi Mohan, Katherine J. Kuchenbecker

- Prof. Dangxiao Wang

- Hybrid - Webex plus in-person attendance in 5N18

Current virtual reality systems mainly rely on hand-held controllers, which can provide only six-degree-of-freedom motion tracking and vibrotactile feedback to users. One promising route to greater immersion is to develop wearable systems that can capture hand motion with more than 20 DoF and deliver distributed kinesthetic and tactile feedback to the skin. In this talk, I will discuss the technical challenges of developing high-fidelity wearable haptic systems and then introduce our work on haptic gloves, hand-based haptic rendering algorithms, and applications of wearable haptic systems in medicine and on space stations.

Organizers: Katherine J. Kuchenbecker

Biomechanics, Estimation, and Augmentation of Human Balance during Perturbed Locomotion

- 09 June 2023 • 11:00—12:00

- Jennifer Leestma

- Hybrid - Webex plus in-person attendance in 5N18

Recent advances in wearable robotics have unveiled the potential of exoskeletons to augment human locomotion across a variety of environments. However, few studies have evaluated the capability of these devices to augment human balance during unstable locomotion. In this talk, I will discuss how we’re threading together biomechanics, mechanical and mechatronic design, wearable sensor-informed machine learning, and controls to work towards a balance-augmenting exoskeleton. I’ll start by discussing human balance, recovery strategies, and some of the most challenging destabilizing scenarios that we have identified through our recent studies. I will also discuss how we are utilizing machine learning to estimate and predict human biomechanical outcomes in these destabilizing environments. Lastly, I will introduce our ongoing work to integrate biomechanics and machine learning to develop intelligent exoskeleton control algorithms for balance augmentation.

Organizers: Katherine J. Kuchenbecker, Andrew Schulz

- Dr. Yanpei Huang

- Hybrid - Webex plus in-person attendance in 5N18

Many surgical tasks require using three or more tools simultaneously. Currently, surgeries are typically performed by a main surgeon and an assistant, whose performance is known to suffer from miscommunication. Providing surgeons with tools and techniques to control three or four surgical tools by themselves would avoid miscommunication and could improve surgical outcomes. However, current interfaces that allow a surgeon to control several tools offer only limited precision, dexterity, and intuitiveness. I therefore developed a dedicated foot interface and techniques that allow an operator to control three tools intuitively and continuously. Using individual motion patterns, the operator could simultaneously control a novel flexible endoscope and its two tools, as demonstrated in an intervention on a pig stomach. In addition, I used my interface to systematically investigate the main characteristics, opportunities, and limitations of movement augmentation for "three-handed manipulation." In this talk, I will present these works and outline the opportunities that movement augmentation opens up for applications in industry and medicine, especially solo surgery.

Organizers: Katherine J. Kuchenbecker

- Vani Sundaram

- Hybrid - Webex plus in-person attendance in 5N18

Due to the deformable and compliant nature of soft systems, there is an ongoing need to address the challenges of state estimation and environmental interaction using embedded and distributed sensing. In this talk, I will share the steps I took to develop reliable soft sensors that can be scaled up to control the movements and interactions of various multi-unit electrostatic systems. Initially, I focused on characterizing, sensing, and controlling a single HASEL actuator. During this process, however, we discovered issues with the existing method of sensing the movement of these electrostatic actuators, which prevented us from building larger systems. To overcome this challenge, I developed a magnetic sensing mechanism that detects the deformation of these actuators and allows us to scale up the number of units in the system. With this approach, we successfully built and controlled several multi-unit systems. We also demonstrate the benefits of magnetic sensing by applying it to a more widely studied type of soft system: a pneumatic soft gripper. Overall, my talk will provide insights into developing reliable soft sensors that can be scaled up to control complex multi-unit systems.

Organizers: Katherine J. Kuchenbecker