2024

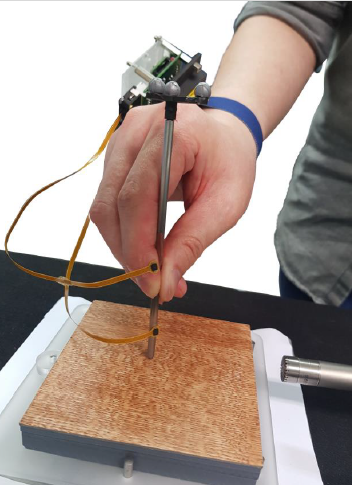

L’Orsa, R., Bisht, A., Yu, L., Murari, K., Westwick, D. T., Sutherland, G. R., Kuchenbecker, K. J.

Reflectance Outperforms Force and Position in Model-Free Needle Puncture Detection

In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, USA, July 2024 (inproceedings) Accepted

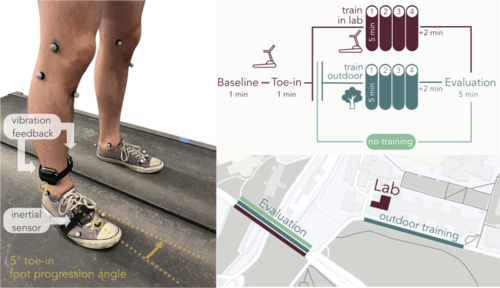

Rokhmanova, N., Martus, J., Faulkner, R., Fiene, J., Kuchenbecker, K. J.

GaitGuide: A Wearable Device for Vibrotactile Motion Guidance

Workshop paper (3 pages) presented at the ICRA Workshop on Advancing Wearable Devices and Applications Through Novel Design, Sensing, Actuation, and AI, Yokohama, Japan, May 2024 (misc) Accepted

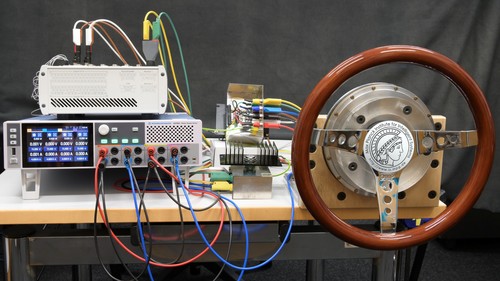

Javot, B., Nguyen, V. H., Ballardini, G., Kuchenbecker, K. J.

CAPT Motor: A Strong Direct-Drive Rotary Haptic Interface

Hands-on demonstration presented at the IEEE Haptics Symposium, Long Beach, USA, April 2024 (misc)

Sanchez-Tamayo, N., Yoder, Z., Ballardini, G., Rothemund, P., Keplinger, C., Kuchenbecker, K. J.

Cutaneous Electrohydraulic (CUTE) Wearable Devices for Multimodal Haptic Feedback

Extended abstract (1 page) presented at the IEEE RoboSoft Workshop on Multimodal Soft Robots for Multifunctional Manipulation, Locomotion, and Human-Machine Interaction, San Diego, USA, April 2024 (misc)

Fazlollahi, F., Seifi, H., Ballardini, G., Taghizadeh, Z., Schulz, A., MacLean, K. E., Kuchenbecker, K. J.

Quantifying Haptic Quality: External Measurements Match Expert Assessments of Stiffness Rendering Across Devices

Work-in-progress paper (2 pages) presented at the IEEE Haptics Symposium, Long Beach, USA, April 2024 (misc)

Sanchez-Tamayo, N., Yoder, Z., Ballardini, G., Rothemund, P., Keplinger, C., Kuchenbecker, K. J.

Cutaneous Electrohydraulic Wearable Devices for Expressive and Salient Haptic Feedback

Hands-on demonstration presented at the IEEE Haptics Symposium, Long Beach, USA, April 2024 (misc)

Serhat, G., Kuchenbecker, K. J.

Fingertip Dynamic Response Simulated Across Excitation Points and Frequencies

Biomechanics and Modeling in Mechanobiology, April 2024 (article) Accepted

Sanchez-Tamayo, N., Yoder, Z., Ballardini, G., Rothemund, P., Keplinger, C., Kuchenbecker, K. J.

Demonstration: Cutaneous Electrohydraulic (CUTE) Wearable Devices for Expressive and Salient Haptic Feedback

Hands-on demonstration presented at the IEEE RoboSoft Conference, San Diego, USA, April 2024 (misc)

Mohan, M., Mat Husin, H., Kuchenbecker, K. J.

Expert Perception of Teleoperated Social Exercise Robots

In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction (HRI), pages: 769-773, Boulder, USA, March 2024, Late-Breaking Report (LBR) (5 pages) (inproceedings)

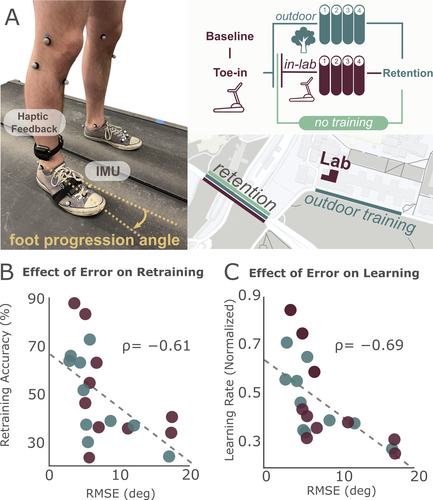

Rokhmanova, N., Pearl, O., Kuchenbecker, K. J., Halilaj, E.

IMU-Based Kinematics Estimation Accuracy Affects Gait Retraining Using Vibrotactile Cues

IEEE Transactions on Neural Systems and Rehabilitation Engineering, 32, pages: 1005-1012, February 2024 (article)

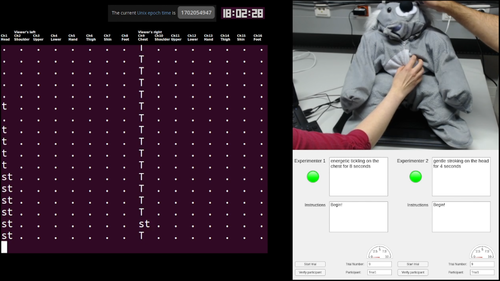

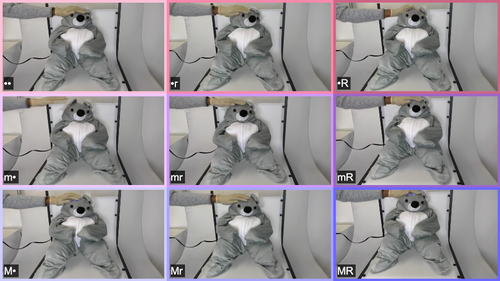

Burns, R.

Creating a Haptic Empathetic Robot Animal That Feels Touch and Emotion

University of Tübingen, Tübingen, Germany, February 2024, Department of Computer Science (phdthesis)

Schulz, A., Serhat, G., Kuchenbecker, K. J.

Adapting a High-Fidelity Simulation of Human Skin for Comparative Touch Sensing in the Elephant Trunk

Abstract presented at the Society for Integrative and Comparative Biology Annual Meeting (SICB), Seattle, USA, January 2024 (misc)

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

MPI-10: Haptic-Auditory Measurements from Tool-Surface Interactions

Dataset published as a companion to the journal article "Robust Surface Recognition with the Maximum Mean Discrepancy: Degrading Haptic-Auditory Signals through Bandwidth and Noise" in IEEE Transactions on Haptics, January 2024 (misc)

Fitter, N. T., Mohan, M., Preston, R. C., Johnson, M. J., Kuchenbecker, K. J.

How Should Robots Exercise with People? Robot-Mediated Exergames Win with Music, Social Analogues, and Gameplay Clarity

Frontiers in Robotics and AI, 10(1155837):1-18, January 2024 (article)

Schulz, A., Kaufmann, L., Brecht, M., Richter, G., Kuchenbecker, K. J.

Whiskers That Don’t Whisk: Unique Structure From the Absence of Actuation in Elephant Whiskers

Abstract presented at the Society for Integrative and Comparative Biology Annual Meeting (SICB), Seattle, USA, January 2024 (misc)

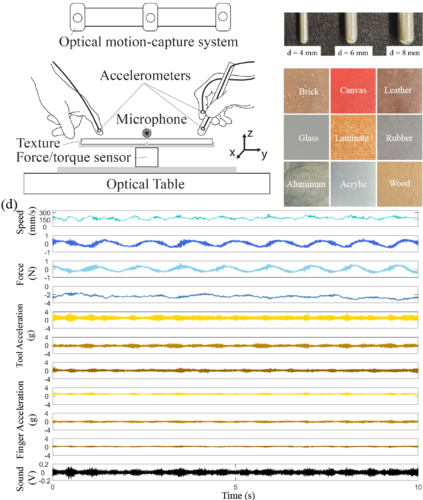

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

Robust Surface Recognition with the Maximum Mean Discrepancy: Degrading Haptic-Auditory Signals through Bandwidth and Noise

IEEE Transactions on Haptics, 17(1):58-65, January 2024, Presented at the IEEE Haptics Symposium (article)

Landin, N., Romano, J. M., McMahan, W., Kuchenbecker, K. J.

Discrete Fourier Transform Three-to-One (DFT321): Code

MATLAB code for the discrete Fourier transform three-to-one (DFT321) algorithm, 2024 (misc)

2023

L’Orsa, R., Lama, S., Westwick, D., Sutherland, G., Kuchenbecker, K. J.

Towards Semi-Automated Pleural Cavity Access for Pneumothorax in Austere Environments

Acta Astronautica, 212, pages: 48-53, November 2023 (article)

Mohan, M.

Gesture-Based Nonverbal Interaction for Exercise Robots

University of Tübingen, Tübingen, Germany, October 2023, Department of Computer Science (phdthesis)

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

Seeking Causal, Invariant, Structures with Kernel Mean Embeddings in Haptic-Auditory Data from Tool-Surface Interaction

Workshop paper (4 pages) presented at the IROS Workshop on Causality for Robotics: Answering the Question of Why, Detroit, USA, October 2023 (misc)

Allemang–Trivalle, A.

Enhancing Surgical Team Collaboration and Situation Awareness through Multimodal Sensing

In Proceedings of the ACM International Conference on Multimodal Interaction (ICMI), pages: 716-720, Paris, France, October 2023, Extended abstract (5 pages) presented at the ICMI Doctoral Consortium (misc)

Garrofé, G., Schoeffmann, C., Zangl, H., Kuchenbecker, K. J., Lee, H.

NearContact: Accurate Human Detection using Tomographic Proximity and Contact Sensing with Cross-Modal Attention

Extended abstract (4 pages) presented at the International Workshop on Human-Friendly Robotics (HFR), Munich, Germany, September 2023 (misc)

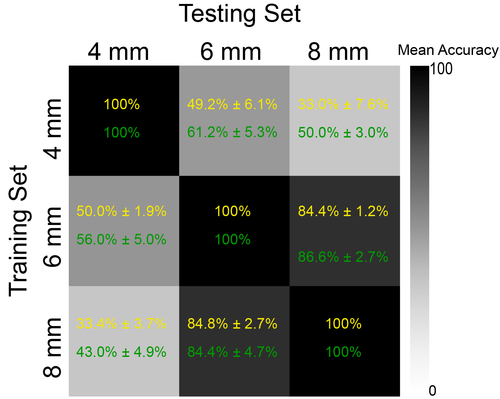

Khojasteh, B., Solowjow, F., Trimpe, S., Kuchenbecker, K. J.

Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test

IEEE Transactions on Automation Science and Engineering, pages: 1-16, August 2023 (article)

Rokhmanova, N., Pearl, O., Kuchenbecker, K. J., Halilaj, E.

The Role of Kinematics Estimation Accuracy in Learning with Wearable Haptics

Abstract presented at the American Society of Biomechanics (ASB), Knoxville, USA, August 2023 (misc)

Burns, R. B., Ojo, F., Kuchenbecker, K. J.

Wear Your Heart on Your Sleeve: Users Prefer Robots with Emotional Reactions to Touch and Ambient Moods

In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), pages: 1914-1921, Busan, South Korea, August 2023 (inproceedings)

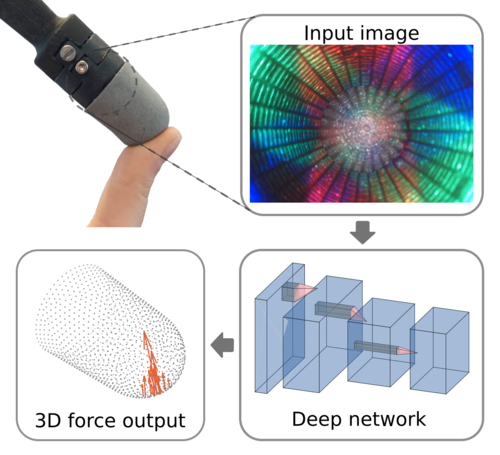

Andrussow, I., Sun, H., Kuchenbecker, K. J., Martius, G.

Minsight: A Fingertip-Sized Vision-Based Tactile Sensor for Robotic Manipulation

Advanced Intelligent Systems, 5(8):2300042, August 2023, Inside back cover (article)

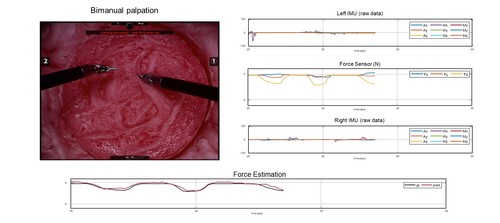

Lee, Y., Husin, H. M., Forte, M., Lee, S., Kuchenbecker, K. J.

Learning to Estimate Palpation Forces in Robotic Surgery From Visual-Inertial Data

IEEE Transactions on Medical Robotics and Bionics, 5(3):496-506, August 2023 (article)

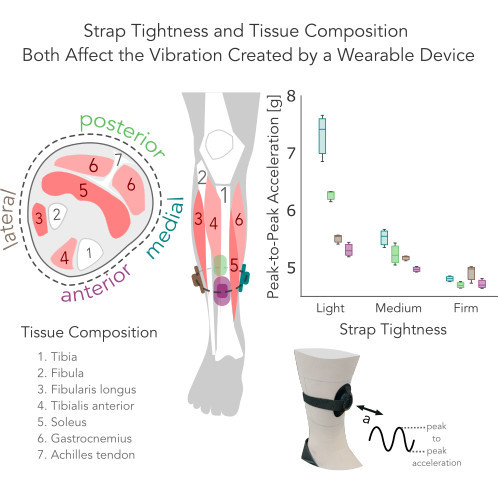

Rokhmanova, N., Faulkner, R., Martus, J., Fiene, J., Kuchenbecker, K. J.

Strap Tightness and Tissue Composition Both Affect the Vibration Created by a Wearable Device

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

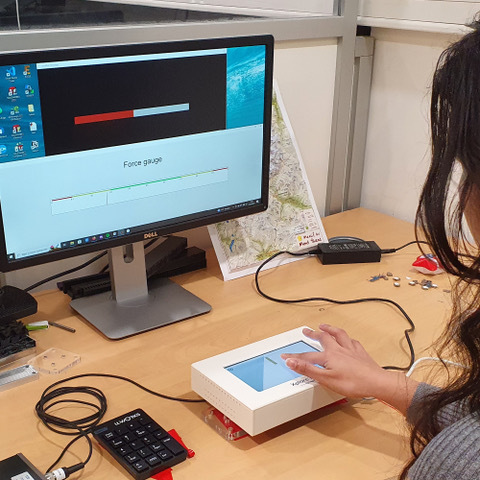

Ballardini, G., Kuchenbecker, K. J.

Toward a Device for Reliable Evaluation of Vibrotactile Perception

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

Khojasteh, B., Solowjow, F., Trimpe, S., Kuchenbecker, K. J.

Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test: Code

Code published as a companion to the journal article "Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test" in IEEE Transactions on Automation Science and Engineering, July 2023 (misc)

Fazlollahi, F., Taghizadeh, Z., Kuchenbecker, K. J.

Improving Haptic Rendering Quality by Measuring and Compensating for Undesired Forces

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

Capturing Rich Auditory-Haptic Contact Data for Surface Recognition

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

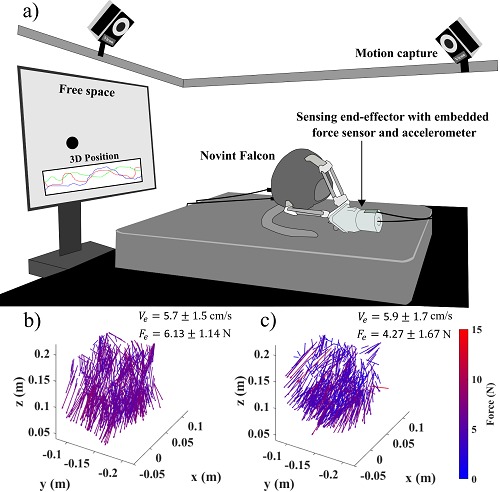

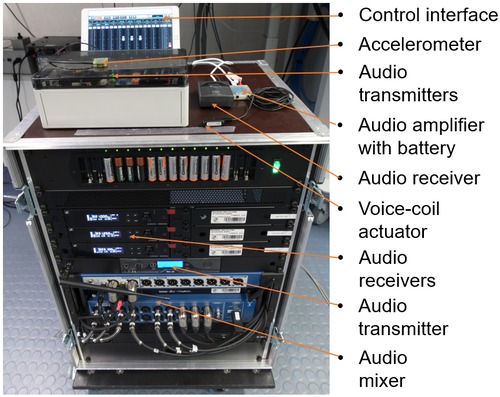

Gong, Y., Javot, B., Lauer, A. P. R., Sawodny, O., Kuchenbecker, K. J.

Naturalistic Vibrotactile Feedback Could Facilitate Telerobotic Assembly on Construction Sites

In Proceedings of the IEEE World Haptics Conference (WHC), pages: 169-175, Delft, The Netherlands, July 2023 (inproceedings)

Gong, Y., Javot, B., Lauer, A. P. R., Sawodny, O., Kuchenbecker, K. J.

AiroTouch: Naturalistic Vibrotactile Feedback for Telerobotic Construction

Hands-on demonstration presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

Javot, B., Nguyen, V. H., Ballardini, G., Kuchenbecker, K. J.

CAPT Motor: A Strong Direct-Drive Haptic Interface

Hands-on demonstration presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

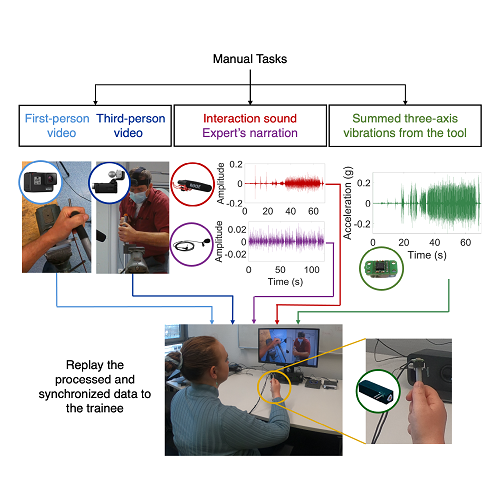

Gourishetti, R., Javot, B., Kuchenbecker, K. J.

Can Recording Expert Demonstrations with Tool Vibrations Facilitate Teaching of Manual Skills?

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

Burns, R. B.

Creating a Haptic Empathetic Robot Animal for Children with Autism

Workshop paper (4 pages) presented at the RSS Pioneers Workshop, Daegu, South Korea, July 2023 (misc)

Gueorguiev, D., Rohou–Claquin, B., Kuchenbecker, K. J.

The Influence of Amplitude and Sharpness on the Perceived Intensity of Isoenergetic Ultrasonic Signals

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

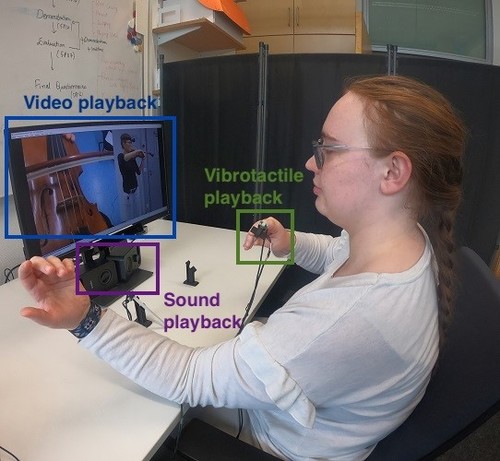

Gourishetti, R., Hughes, A. G., Javot, B., Kuchenbecker, K. J.

Vibrotactile Playback for Teaching Manual Skills from Expert Recordings

Hands-on demonstration presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

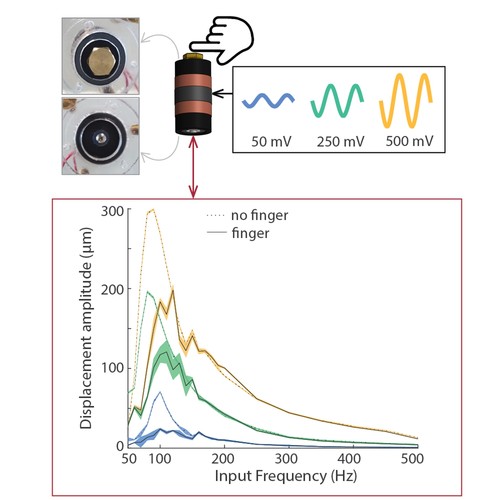

Gertler, I., Serhat, G., Kuchenbecker, K. J.

Generating Clear Vibrotactile Cues with a Magnet Embedded in a Soft Finger Sheath

Soft Robotics, 10(3):624-635, June 2023 (article)

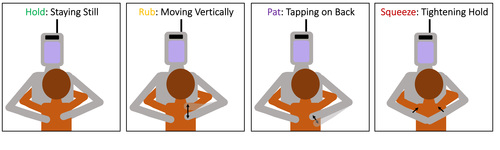

Block, A. E., Seifi, H., Hilliges, O., Gassert, R., Kuchenbecker, K. J.

In the Arms of a Robot: Designing Autonomous Hugging Robots with Intra-Hug Gestures

ACM Transactions on Human-Robot Interaction, 12(2):1-49, June 2023, Special Issue on Designing the Robot Body: Critical Perspectives on Affective Embodied Interaction (article)

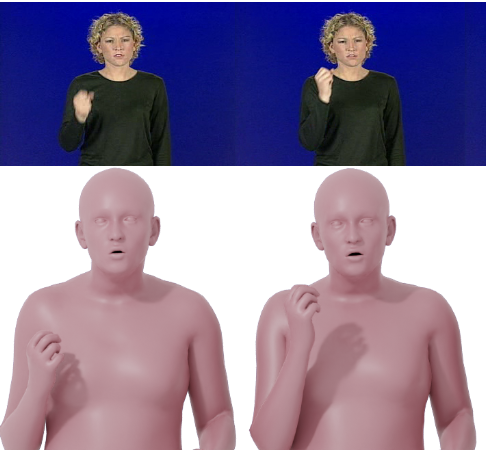

Forte, M., Kulits, P., Huang, C. P., Choutas, V., Tzionas, D., Kuchenbecker, K. J., Black, M. J.

Reconstructing Signing Avatars from Video Using Linguistic Priors

In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages: 12791-12801, June 2023 (inproceedings)

Gong, Y., Javot, B., Lauer, A. P. R., Sawodny, O., Kuchenbecker, K. J.

Naturalistic Vibrotactile Feedback Could Facilitate Telerobotic Assembly on Construction Sites

Poster presented at the ICRA Workshop on Future of Construction: Robot Perception, Mapping, Navigation, Control in Unstructured and Cluttered Environments, London, UK, May 2023 (misc)

Gong, Y., Tashiro, N., Javot, B., Lauer, A. P. R., Sawodny, O., Kuchenbecker, K. J.

AiroTouch: Naturalistic Vibrotactile Feedback for Telerobotic Construction-Related Tasks

Extended abstract (1 page) presented at the ICRA Workshop on Communicating Robot Learning across Human-Robot Interaction, London, UK, May 2023 (misc)

Caccianiga, G., Nubert, J., Hutter, M., Kuchenbecker, K. J.

3D Reconstruction for Minimally Invasive Surgery: Lidar Versus Learning-Based Stereo Matching

Workshop paper (2 pages) presented at the ICRA Workshop on Robot-Assisted Medical Imaging, London, UK, May 2023 (misc)

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

Surface Perception through Haptic-Auditory Contact Data

Workshop paper (4 pages) presented at the ICRA Workshop on Embracing Contacts, London, UK, May 2023 (misc)

Mohan, M., Kuchenbecker, K. J.

OCRA: An Optimization-Based Customizable Retargeting Algorithm for Teleoperation

Workshop paper (3 pages) presented at the ICRA Workshop Toward Robot Avatars, London, UK, May 2023 (misc)

Fazlollahi, F., Kuchenbecker, K. J.

Haptify: A Measurement-Based Benchmarking System for Grounded Force-Feedback Devices

IEEE Transactions on Robotics, 39(2):1622-1636, April 2023 (article)

Rokhmanova, N.

Wearable Biofeedback for Knee Joint Health

Extended abstract (5 pages) presented at the ACM SIGCHI Conference on Human Factors in Computing Systems (CHI) Doctoral Consortium, Hamburg, Germany, April 2023 (misc)

Brown, J. D., Kuchenbecker, K. J.

Effects of Automated Skill Assessment on Robotic Surgery Training

The International Journal of Medical Robotics and Computer Assisted Surgery, 19(2):e2492, April 2023 (article)