2024

Cao, C. G. L., Javot, B., Bhattarai, S., Bierig, K., Oreshnikov, I., Volchkov, V. V.

Fiber-Optic Shape Sensing Using Neural Networks Operating on Multispecklegrams

IEEE Sensors Journal, 24(17):27532-27540, September 2024 (article)

Sanchez-Tamayo, N., Yoder, Z., Rothemund, P., Ballardini, G., Keplinger, C., Kuchenbecker, K. J.

Cutaneous Electrohydraulic (CUTE) Wearable Devices for Pleasant Broad-Bandwidth Haptic Cues

Advanced Science, (2402461):1-14, September 2024 (article)

Tashiro, N., Faulkner, R., Melnyk, S., Rodriguez, T. R., Javot, B., Tahouni, Y., Cheng, T., Wood, D., Menges, A., Kuchenbecker, K. J.

Building Instructions You Can Feel: Edge-Changing Haptic Devices for Digitally Guided Construction

ACM Transactions on Computer-Human Interaction, September 2024 (article) Accepted

Gong, Y.

Engineering and Evaluating Naturalistic Vibrotactile Feedback for Telerobotic Assembly

University of Stuttgart, Stuttgart, Germany, August 2024, Faculty of Design, Production Engineering and Automotive Engineering (phdthesis)

Khojasteh, B., Solowjow, F., Trimpe, S., Kuchenbecker, K. J.

Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test

IEEE Transactions on Automation Science and Engineering, 21(3):4432-4447, July 2024 (article)

Al-Haddad, H., Guarnera, D., Tamadon, I., Arrico, L., Ballardini, G., Mariottini, F., Cucini, A., Ricciardi, S., Vistoli, F., Rotondo, M. I., Campani, D., Ren, X., Ciuti, G., Terry, B., Iacovacci, V., Ricotti, L.

Optimized Magnetically Docked Ingestible Capsules for Non-Invasive Refilling of Implantable Devices

Advanced Intelligent Systems, (2400125):1-21, July 2024 (article)

Matthew, V., Simancek, R. E., Telepo, E., Machesky, J., Willman, H., Ismail, A. B., Schulz, A. K.

Empowering Change: The Role of Student Changemakers in Advancing Sustainability within Engineering Education

Proceedings of the American Society of Engineering Education (ASEE), June 2024, Victoria Matthew and Andrew K. Schulz contributed equally to this publication. (issue) In press

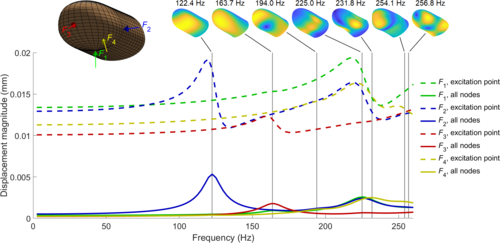

Serhat, G., Kuchenbecker, K. J.

Fingertip Dynamic Response Simulated Across Excitation Points and Frequencies

Biomechanics and Modeling in Mechanobiology, 23, pages: 1369-1376, May 2024 (article)

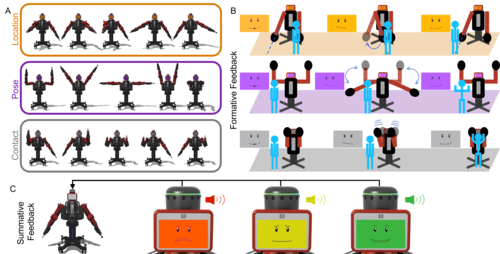

Mohan, M., Nunez, C. M., Kuchenbecker, K. J.

Closing the Loop in Minimally Supervised Human-Robot Interaction: Formative and Summative Feedback

Scientific Reports, 14(10564):1-18, May 2024 (article)

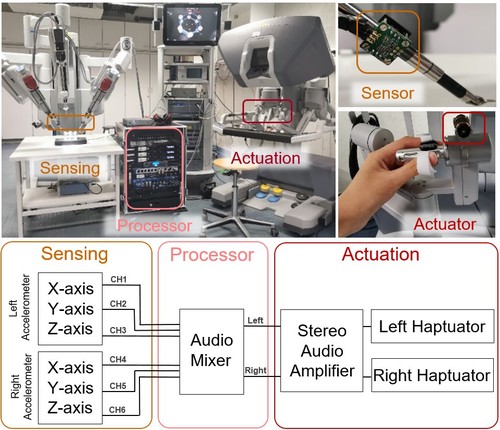

Gong, Y., Husin, H. M., Erol, E., Ortenzi, V., Kuchenbecker, K. J.

AiroTouch: Enhancing Telerobotic Assembly through Naturalistic Haptic Feedback of Tool Vibrations

Frontiers in Robotics and AI, 11, pages: 1-15, May 2024 (article)

Allemang-Trivalle, A., Donjat, J., Bechu, G., Coppin, G., Chollet, M., Klaproth, O. W., Mitschke, A., Schirrmann, A., Cao, C. G. L.

Modeling Fatigue in Manual and Robot-Assisted Work for Operator 5.0

IISE Transactions on Occupational Ergonomics and Human Factors, 12(1-2):135-147, March 2024 (article)

Schulz, A.

Being Neurodivergent in Academia: Autistic and abroad

eLife, 13, March 2024 (article)

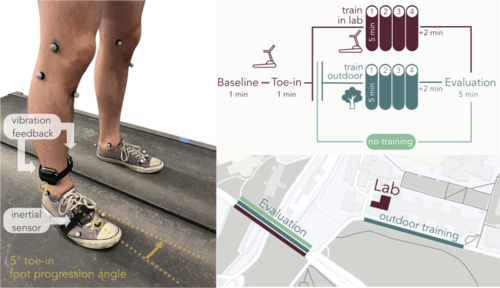

Rokhmanova, N., Pearl, O., Kuchenbecker, K. J., Halilaj, E.

IMU-Based Kinematics Estimation Accuracy Affects Gait Retraining Using Vibrotactile Cues

IEEE Transactions on Neural Systems and Rehabilitation Engineering, 32, pages: 1005-1012, February 2024 (article)

Burns, R.

Creating a Haptic Empathetic Robot Animal That Feels Touch and Emotion

University of Tübingen, Tübingen, Germany, February 2024, Department of Computer Science (phdthesis)

Fitter, N. T., Mohan, M., Preston, R. C., Johnson, M. J., Kuchenbecker, K. J.

How Should Robots Exercise with People? Robot-Mediated Exergames Win with Music, Social Analogues, and Gameplay Clarity

Frontiers in Robotics and AI, 10(1155837):1-18, January 2024 (article)

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

Robust Surface Recognition with the Maximum Mean Discrepancy: Degrading Haptic-Auditory Signals through Bandwidth and Noise

IEEE Transactions on Haptics, 17(1):58-65, January 2024, Presented at the IEEE Haptics Symposium (article)

2023

L’Orsa, R., Lama, S., Westwick, D., Sutherland, G., Kuchenbecker, K. J.

Towards Semi-Automated Pleural Cavity Access for Pneumothorax in Austere Environments

Acta Astronautica, 212, pages: 48-53, November 2023 (article)

Mohan, M.

Gesture-Based Nonverbal Interaction for Exercise Robots

University of Tübingen, Tübingen, Germany, October 2023, Department of Computer Science (phdthesis)

Ballardini, G., Cherpin, A., Chua, K. S. G., Hussain, A., Kager, S., Xiang, L., Campolo, D., Casadio, M.

Upper Limb Position Matching After Stroke: Evidence for Bilateral Asymmetry in Precision but Not in Accuracy

IEEE Access, 11, pages: 112851-112860, October 2023 (article)

Lagazzi, E., Ballardini, G., Drogo, A., Viola, L., Marrone, E., Valente, V., Bonetti, M., Lee, J., King, D. R., Ricci, S.

The Certification Matters: A Comparative Performance Analysis of Combat Application Tourniquets versus Non-Certified CAT Look-Alike Tourniquets

Prehospital and Disaster Medicine, 38(4):450-455, August 2023 (article)

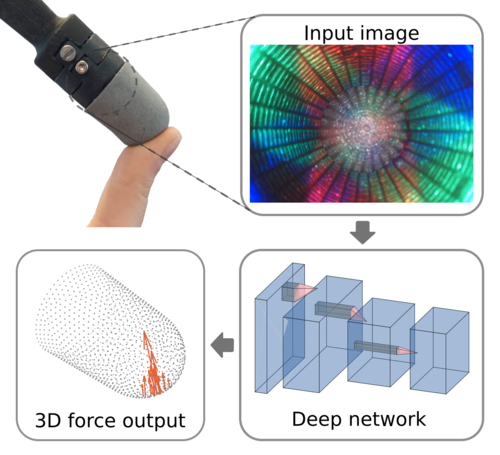

Andrussow, I., Sun, H., Kuchenbecker, K. J., Martius, G.

Minsight: A Fingertip-Sized Vision-Based Tactile Sensor for Robotic Manipulation

Advanced Intelligent Systems, 5(8), August 2023, Inside back cover (article)

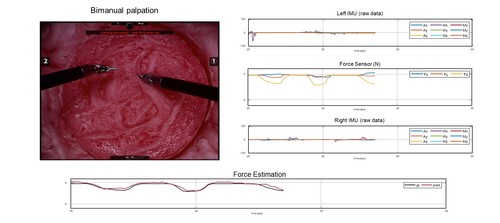

Lee, Y., Husin, H. M., Forte, M., Lee, S., Kuchenbecker, K. J.

Learning to Estimate Palpation Forces in Robotic Surgery From Visual-Inertial Data

IEEE Transactions on Medical Robotics and Bionics, 5(3):496-506, August 2023 (article)

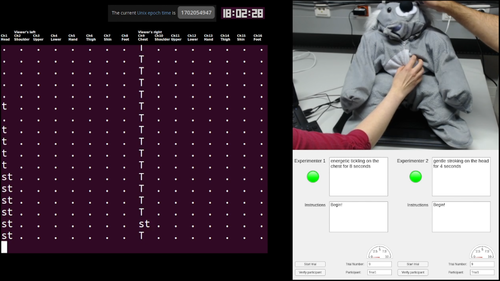

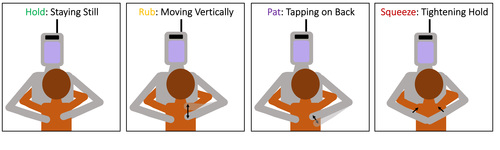

Block, A. E., Seifi, H., Hilliges, O., Gassert, R., Kuchenbecker, K. J.

In the Arms of a Robot: Designing Autonomous Hugging Robots with Intra-Hug Gestures

ACM Transactions on Human-Robot Interaction, 12(2):1-49, June 2023, Special Issue on Designing the Robot Body: Critical Perspectives on Affective Embodied Interaction (article)

Gertler, I., Serhat, G., Kuchenbecker, K. J.

Generating Clear Vibrotactile Cues with a Magnet Embedded in a Soft Finger Sheath

Soft Robotics, 10(3):624-635, June 2023 (article)

Fazlollahi, F., Kuchenbecker, K. J.

Haptify: A Measurement-Based Benchmarking System for Grounded Force-Feedback Devices

IEEE Transactions on Robotics, 39(2):1622-1636, April 2023 (article)

Brown, J. D., Kuchenbecker, K. J.

Effects of Automated Skill Assessment on Robotic Surgery Training

The International Journal of Medical Robotics and Computer Assisted Surgery, 19(2):e2492, April 2023 (article)

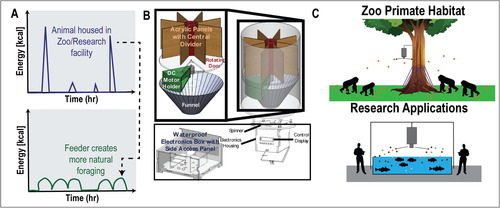

Jadali, N., Zhang, M. J., Schulz, A. K., Meyerchick, J., Hu, D. L.

ForageFeeder: A Low-Cost Open Source Feeder for Randomly Distributed Food

HardwareX, 14(e00405):1-17, March 2023 (article)

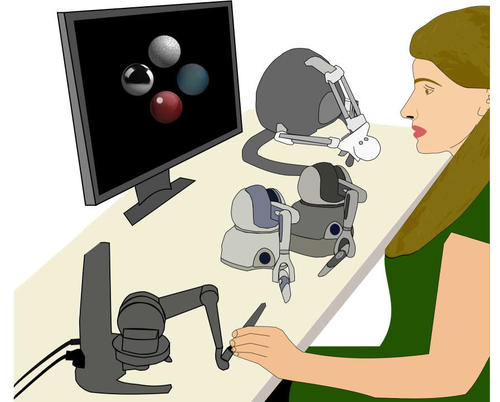

Spiers, A. J., Young, E., Kuchenbecker, K. J.

The S-BAN: Insights into the Perception of Shape-Changing Haptic Interfaces via Virtual Pedestrian Navigation

ACM Transactions on Computer-Human Interaction, 30(1):1-31, March 2023 (article)

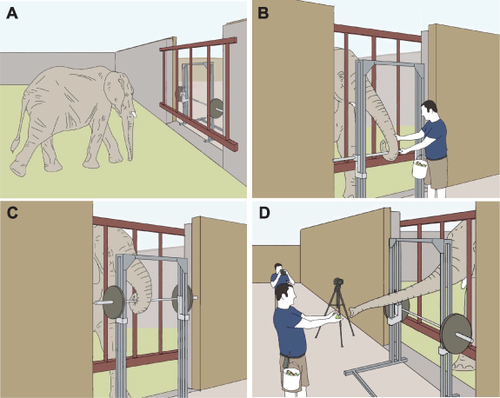

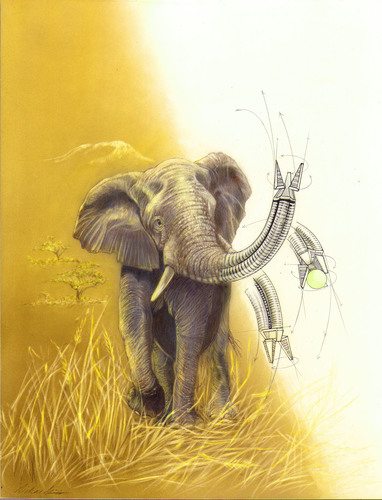

Schulz, A., Reidenberg, J., Wu, J. N., Tang, C. Y., Seleb, B., Mancebo, J., Elgart, N., Hu, D.

Elephant trunks use an adaptable prehensile grip

Bioinspiration and Biomimetics, 18(2), February 2023 (article)

Magondu, B., Lee, A., Schulz, A., Buchelli, G., Meng, M., Kaminski, C., Yang, P., Carver, S., Hu, D.

Drying dynamics of pellet feces

Soft Matter, 19, pages: 723-732, January 2023 (article)

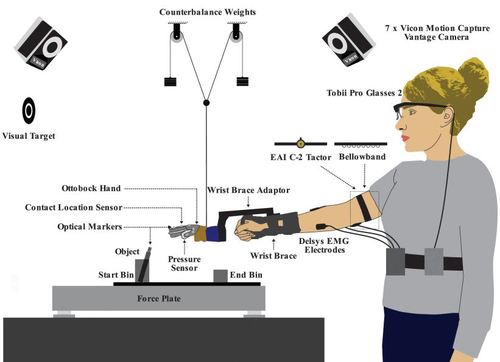

Thomas, N., Fazlollahi, F., Kuchenbecker, K. J., Brown, J. D.

The Utility of Synthetic Reflexes and Haptic Feedback for Upper-Limb Prostheses in a Dexterous Task Without Direct Vision

IEEE Transactions on Neural Systems and Rehabilitation Engineering, 31, pages: 169-179, January 2023 (article)

Lee, H., Sun, H., Park, H., Serhat, G., Javot, B., Martius, G., Kuchenbecker, K. J.

Predicting the Force Map of an ERT-Based Tactile Sensor Using Simulation and Deep Networks

IEEE Transactions on Automation Science and Engineering, 20(1):425-439, January 2023 (article)

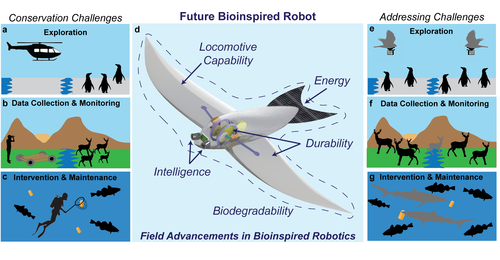

Chellapurath, M., Khandelwal, P., Schulz, A. K.

Bioinspired Robots Can Foster Nature Conservation

Frontiers in Robotics and AI, 10, 2023 (article) Accepted

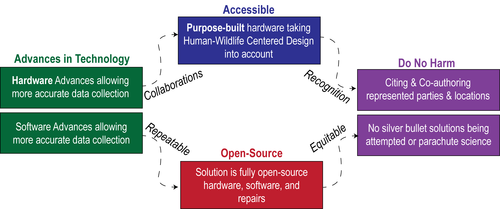

Schulz, A., Shriver, C., Stathatos, S., Seleb, B., Weigel, E., Chang, Y., Bhamla, M. S., Hu, D., III, J. R. M.

Conservation Tools: The Next Generation of Engineering–Biology Collaborations

Journal of the Royal Society Interface, 2023, Andrew Schulz, Cassie Shriver, Suzanne Stathatos, and Benjamin Seleb are co-first authors. (article)

Schulz, A., Schneider, N., Zhang, M., Singal, K.

A Year at the Forefront of Hydrostat Motion

Biology Open, 2023, N. Schneider, M. Zhang, and K. Singal contributed equally to this manuscript. (article)

2022

Richardson, B.

Multi-Timescale Representation Learning of Human and Robot Haptic Interactions

University of Stuttgart, Stuttgart, Germany, December 2022, Faculty of Computer Science, Electrical Engineering and Information Technology (phdthesis)

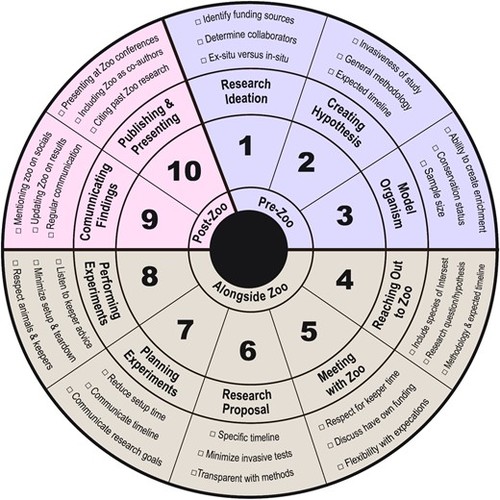

Schulz, A., Shriver, C., Aubuchon, C., Weigel, E., Kolar, M., III, J. M., Hu, D.

A Guide for Successful Research Collaborations between Zoos and Universities

Integrative and Comparative Biology, 62, pages: 1174-1185, November 2022 (article)

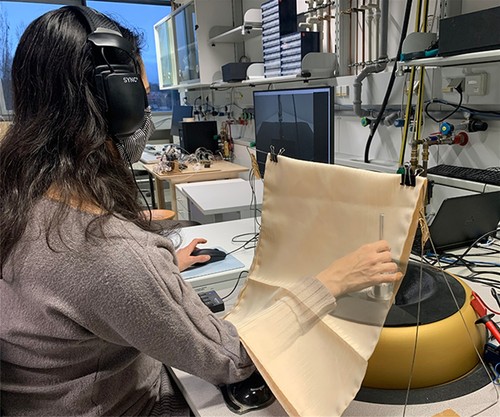

Nam, S.

Understanding the Influence of Moisture on Fingerpad-Surface Interactions

University of Tübingen, Tübingen, Germany, October 2022, Department of Computer Science (phdthesis)

Zruya, O., Sharon, Y., Kossowsky, H., Forni, F., Geftler, A., Nisky, I.

A New Power Law Linking the Speed to the Geometry of Tool-Tip Orientation in Teleoperation of a Robot-Assisted Surgical System

IEEE Robotics and Automation Letters, 7(4):10762-10769, October 2022 (article)

Richardson, B. A., Vardar, Y., Wallraven, C., Kuchenbecker, K. J.

Learning to Feel Textures: Predicting Perceptual Similarities from Unconstrained Finger-Surface Interactions

IEEE Transactions on Haptics, 15(4):705-717, October 2022, Benjamin A. Richardson and Yasemin Vardar contributed equally to this publication. (article)

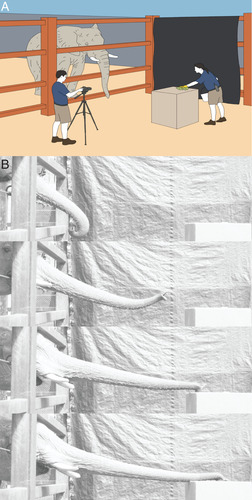

Schulz, A., Boyle, M., Boyle, C., Sordilla, S., Rincon, C., Hooper, S., Aubuchon, C., Reidenberg, J., Higgins, C., Hu, D.

Skin wrinkles and folds enable asymmetric stretch in the elephant trunk

Proceedings of the National Academy of Sciences, 119, August 2022 (article)

Guo, Y., Serhat, G., Pérez, M. G., Knippers, J.

Maximizing buckling load of elliptical composite cylinders using lamination parameters

Engineering Structures, 262, pages: 114342, July 2022 (article)

Mohebbi, N., Schulz, A., Spencer, T., Pos, K., Mandel, A., Casas, J., Hu, D.

The Scaling of Olfaction: Moths have Relatively More Olfactory Surface Area than Mammals

Integrative and Comparative Biology, 62(1), July 2022, Nina Mohebbi and Andrew Schulz contributed equally. (article)

Serhat, G., Vardar, Y., Kuchenbecker, K. J.

Contact Evolution of Dry and Hydrated Fingertips at Initial Touch

PLOS ONE, 17(7):e0269722, July 2022, Gokhan Serhat and Yasemin Vardar contributed equally to this publication. (article)

Lee, H., Tombak, G. I., Park, G., Kuchenbecker, K. J.

Perceptual Space of Algorithms for Three-to-One Dimensional Reduction of Realistic Vibrations

IEEE Transactions on Haptics, 15(3):521-534, July 2022 (article)

Seyyedrahmani, F., Shahabad, P. K., Serhat, G., Bediz, B., Basdogan, I.

Multi-objective optimization of composite sandwich panels using lamination parameters and spectral Chebyshev method

Composite Structures, 289(115417), June 2022 (article)

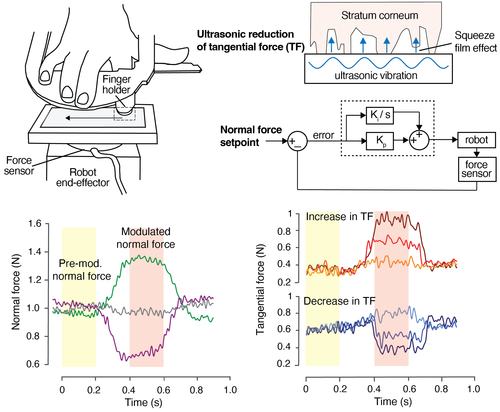

Gueorguiev, D., Lambert, J., Thonnard, J., Kuchenbecker, K. J.

Normal and Tangential Forces Combine to Convey Contact Pressure During Dynamic Tactile Stimulation

Scientific Reports, 12, pages: 8215, May 2022 (article)

Rokhmanova, N., Kuchenbecker, K. J., Shull, P. B., Ferber, R., Halilaj, E.

Predicting Knee Adduction Moment Response to Gait Retraining with Minimal Clinical Data

PLOS Computational Biology, 18(5):e1009500, May 2022 (article)

Forte, M., Gourishetti, R., Javot, B., Engler, T., Gomez, E. D., Kuchenbecker, K. J.

Design of Interactive Augmented Reality Functions for Robotic Surgery and Evaluation in Dry-Lab Lymphadenectomy

The International Journal of Medical Robotics and Computer Assisted Surgery, 18(2):e2351, April 2022 (article)

Burns, R. B., Lee, H., Seifi, H., Faulkner, R., Kuchenbecker, K. J.

Endowing a NAO Robot with Practical Social-Touch Perception

Frontiers in Robotics and AI, 9, pages: 840335, April 2022 (article)