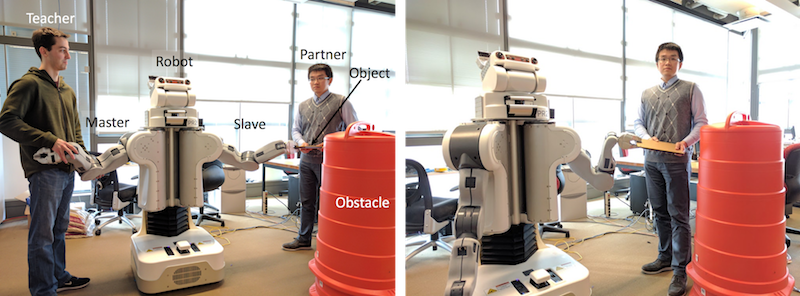

The left image shows the training scenario for collaborative manipulation with a Willow Garage PR2, where the teacher guides the robot to manipulate an object with the partner. The right image shows the testing scenario, where the robot automatically collaborates with the partner in the same task.

Many modern humanoid robots are designed to operate in human environments, such as homes and hospitals. If designed well, such robots could help humans accomplish tasks and lower their physical and mental workload. Rather than requiring an operator to devise control policies and reprogram the robot for every new situation it encounters, learning from demonstration (LfD) gives users a direct way to program robots.

We present a hierarchical structure of task-parameterized models for learning from demonstration: the demonstrations from each situation are encoded in a single model per situation, and these models are combined to create a skill library. This structure has four advantages over the standard approach of encoding all demonstrations together in one model: users can easily add new robot skills or delete existing ones, robots can choose the most relevant skill to use for a new task, robots can estimate the difficulty of new test situations, and robot designers can apply domain knowledge to design better generalization behaviors.
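The skill-library idea described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration, not the authors' implementation: the `Skill` and `SkillLibrary` names, the numeric situation descriptor, and the use of Euclidean distance both to pick the most relevant skill and as a rough difficulty estimate are all hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import Any, Dict, Optional, Tuple


@dataclass
class Skill:
    """One model trained on the demonstrations of a single situation."""
    name: str
    situation: Tuple[float, ...]   # hypothetical descriptor of the training situation
    model: Optional[Any] = None    # placeholder for a trained model (e.g. a TP-GMM)


@dataclass
class SkillLibrary:
    """Collection of per-situation skills that can be edited independently."""
    skills: Dict[str, Skill] = field(default_factory=dict)

    def add(self, skill: Skill) -> None:
        # Adding a skill does not require retraining the others.
        self.skills[skill.name] = skill

    def remove(self, name: str) -> None:
        del self.skills[name]

    def select(self, situation: Tuple[float, ...]) -> Tuple[Skill, float]:
        """Return the closest skill and its distance to the new situation.

        The distance doubles as an assumed difficulty estimate: the farther
        the new situation is from any trained one, the harder it is taken to be.
        """
        if not self.skills:
            raise ValueError("skill library is empty")
        best = min(self.skills.values(),
                   key=lambda s: math.dist(s.situation, situation))
        return best, math.dist(best.situation, situation)


# Usage: three skills trained at made-up situation descriptors.
library = SkillLibrary()
library.add(Skill("near", (0.2,)))
library.add(Skill("mid", (0.5,)))
library.add(Skill("far", (0.8,)))

skill, difficulty = library.select((0.55,))
print(skill.name, round(difficulty, 3))
```

Because each situation has its own entry, removing or replacing one skill leaves the others untouched, which is the property that a single model encoding all demonstrations lacks.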

We illustrate the proposed structure by applying it to a collaborative task in which a robot arm moves an object together with a human partner, as shown in the figure above. Demonstrations were collected for three situations using a novel kinesthetic teleoperation method and were encoded using task-parameterized Gaussian mixture models (TP-GMM). Naive participants then collaborated with the robot under three controllers: a passive model with only gravity compensation, a single TP-GMM encoding all demonstrations, and the proposed structure. Our method achieved significantly better task performance and subjective ratings in most tested scenarios.
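For readers unfamiliar with the TP-GMM encoding mentioned above, the following is a sketch of the standard formulation from the TP-GMM literature, not a formula given in this summary. The notation is assumed: each of the $P$ task frames $j$ has an orientation $A_j$ and origin $b_j$, and component $i$ has a mean $\mu_i^{(j)}$ and covariance $\Sigma_i^{(j)}$ learned in that frame. For a new situation, each local Gaussian is mapped into the global frame and the results are multiplied together:

```latex
\hat{\Sigma}_i
  = \left( \sum_{j=1}^{P} \left( A_j \Sigma_i^{(j)} A_j^{\top} \right)^{-1} \right)^{-1},
\qquad
\hat{\mu}_i
  = \hat{\Sigma}_i \sum_{j=1}^{P}
    \left( A_j \Sigma_i^{(j)} A_j^{\top} \right)^{-1}
    \left( A_j \mu_i^{(j)} + b_j \right)
```

Frames in which the demonstrations were consistent have small covariances and therefore dominate the product, which is how the model adapts to new frame positions and orientations.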