Publication Type

- Ph.D. Thesis

2024

Burns, R.

Creating a Haptic Empathetic Robot Animal That Feels Touch and Emotion

University of Tübingen, Tübingen, Germany, February 2024, Department of Computer Science (phdthesis)

2023

Mohan, M.

Gesture-Based Nonverbal Interaction for Exercise Robots

University of Tübingen, Tübingen, Germany, October 2023, Department of Computer Science (phdthesis)

2022

Richardson, B.

Multi-Timescale Representation Learning of Human and Robot Haptic Interactions

University of Stuttgart, Stuttgart, Germany, December 2022, Faculty of Computer Science, Electrical Engineering and Information Technology (phdthesis)

Nam, S.

Understanding the Influence of Moisture on Fingerpad-Surface Interactions

University of Tübingen, Tübingen, Germany, October 2022, Department of Computer Science (phdthesis)

2021

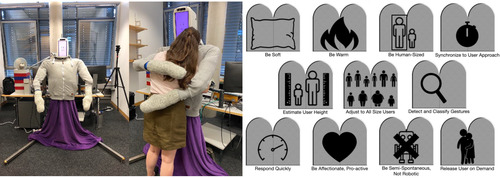

HuggieBot: An Interactive Hugging Robot With Visual and Haptic Perception

2021

Block, A. E.

HuggieBot: An Interactive Hugging Robot With Visual and Haptic Perception

ETH Zürich, Zürich, Switzerland, August 2021, Department of Computer Science (phdthesis)

2020

Young, E. M.

Delivering Expressive and Personalized Fingertip Tactile Cues

University of Pennsylvania, Philadelphia, PA, December 2020, Department of Mechanical Engineering and Applied Mechanics (phdthesis)

Hu, S.

Modulating Physical Interactions in Human-Assistive Technologies

University of Pennsylvania, Philadelphia, PA, August 2020, Department of Mechanical Engineering and Applied Mechanics (phdthesis)

2018

Burka, A. L.

Instrumentation, Data, and Algorithms for Visually Understanding Haptic Surface Properties

University of Pennsylvania, Philadelphia, PA, August 2018, Department of Electrical and Systems Engineering (phdthesis)

2017

Fitter, N. T.

Design and Evaluation of Interactive Hand-Clapping Robots

University of Pennsylvania, Philadelphia, PA, August 2017, Department of Mechanical Engineering and Applied Mechanics (phdthesis)